Regardless of the size of a company or enterprise, everyone has to expect to become a target of cyber attacks. Many attacks are not aimed at a specific target but are automated and hit systems at random. When we deployed a new server to host our own vulnerability database, we noticed that within the first 20 hours online the webserver had already logged almost 800 requests. In this article we dissect where these requests came from and show that attackers target far more than well-known systems and companies these days. In addition, we give practical advice on how to protect your own systems against these attacks.

Legitimate requests to the vulnerability database (37%)

As a first step, we filter out all requests in our log file that constitute valid queries to our vulnerability database (the majority of which were executed in test cases). We do this by filtering all known source IP addresses, as well as regular requests to known API endpoints. The vulnerability database provides the following API endpoints for the retrieval of vulnerability data:

- /api/status

- /api/import

- /api/query_cve

- /api/query_cpe

- /api/index_management

After a first evaluation, we observed that 269 of 724 requests were legitimate requests to the vulnerability database:

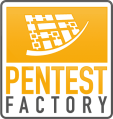

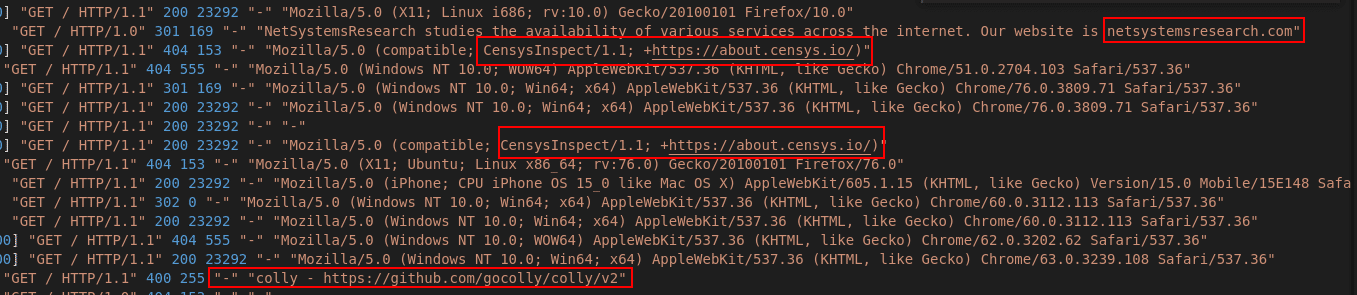

Figure 1: Sample of legitimate requests to the webserver

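The filtering step described above can be sketched in Python. This is only a minimal illustration, not the script we used: it assumes an access log in the common "combined" format, the source address is a placeholder from a documentation range, and it treats a request as legitimate if it comes from a known source address or targets one of the listed API endpoints exactly. Whether the two criteria were combined this way in the original evaluation is an assumption.

```python
import re

# Assumption: access log in the "combined" format written by Nginx/Apache.
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+)[^"]*" (?P<status>\d{3})'
)

# API endpoints as listed in this article.
API_ENDPOINTS = (
    "/api/status",
    "/api/import",
    "/api/query_cve",
    "/api/query_cpe",
    "/api/index_management",
)

# Hypothetical placeholder address (RFC 5737 documentation range).
KNOWN_SOURCES = {"203.0.113.10"}


def is_legitimate(line, known_sources=KNOWN_SOURCES):
    """Return True for requests from known sources or to known endpoints."""
    match = LOG_LINE.match(line)
    if match is None:
        # Malformed request lines (e.g. binary payloads) are never legitimate.
        return False
    path = match.group("path").split("?", 1)[0]  # ignore the query string
    return match.group("ip") in known_sources or path in API_ENDPOINTS


def count_legitimate(lines):
    """Count the legitimate requests in an iterable of log lines."""
    return sum(1 for line in lines if is_legitimate(line))
```

Calling `count_legitimate(open("access.log"))` on a log file (the file name is a placeholder) would yield the number of requests that this heuristic considers legitimate.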

But where do the remaining 455 requests come from?

Directory enumeration of administrative database backends (14%)

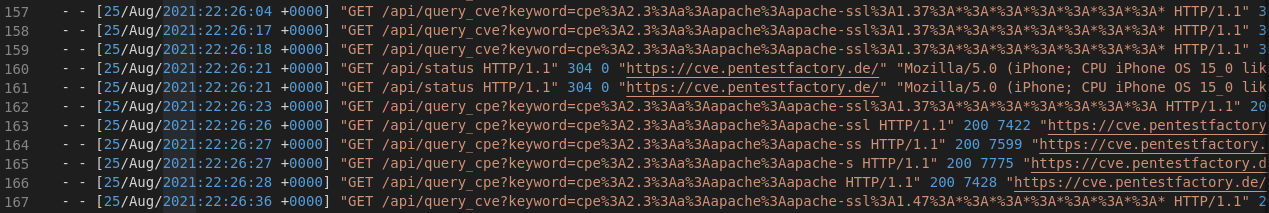

A single IP address was particularly persistent: with 101 requests, an attacker attempted to enumerate various backends for database administration:

Figure 2: Directory scanning to find database backends

Vulnerability scans from unknown sources (14%)

Furthermore, we identified 102 requests whose source IPs we could not associate with domains or specific organisations (e.g., using nslookup or the User-Agent header). The 102 requests originate from 5 different IP addresses or subnets, which corresponds to around 20 requests per scan.

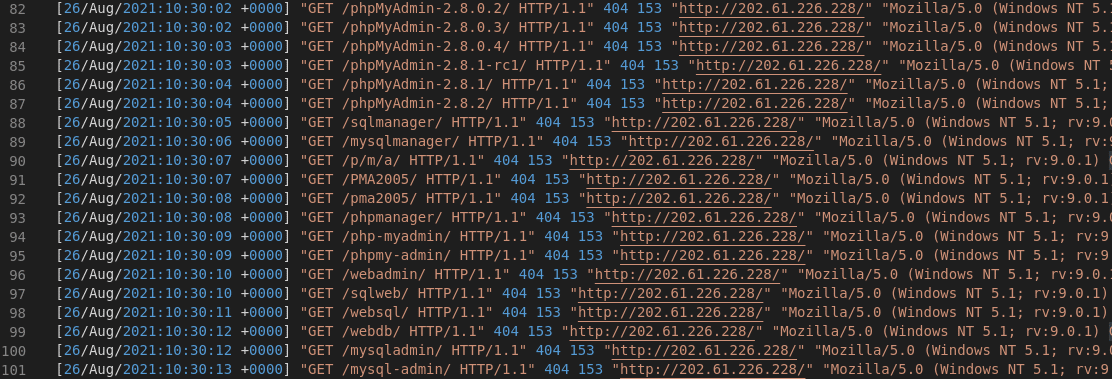

Figure 3: Various vulnerability scans with unknown origin

Enumerated components were:

- boaform Admin Interface (8 requests)

- /api/jsonws/invoke: Liferay CMS Remote Code Execution and other exploits

Requests to / (11.5%)

Overall, we identified 83 requests for the index file of the webserver. Such requests reveal whether a webserver is online and which service it returns initially.

Figure 4: Index-requests of various sources

We identified various providers and tools that checked our webserver for availability:

- censys.io

- netsystemsresearch.com

- leakix.net

- zmap/zgrab (Scanner)

- colly (Scanner-Framework)

Vulnerability scans from leakix.net (9%)

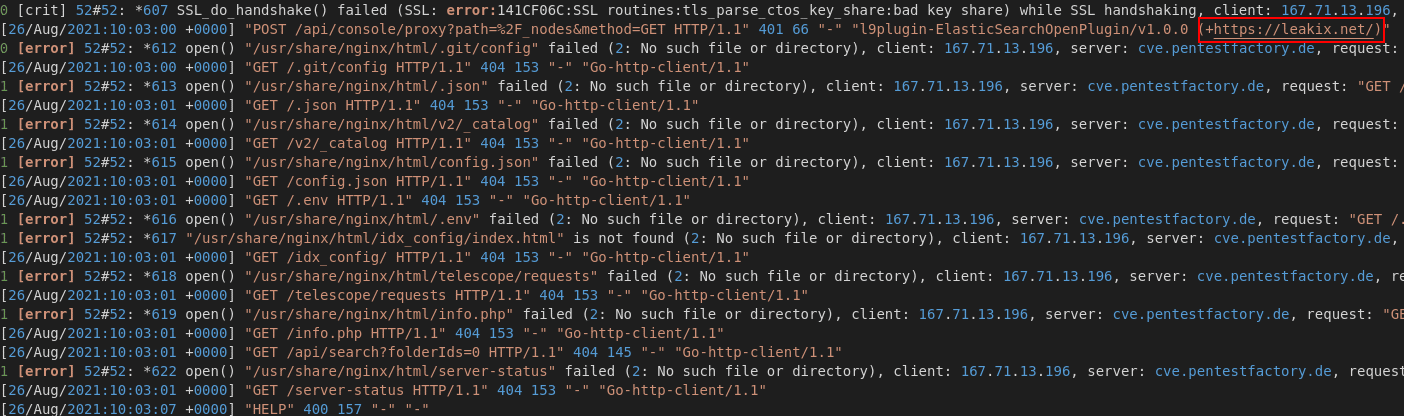

During our evaluation of the log file, we identified a further 65 requests that originate from two IP addresses and use a user agent of “leakix.net”:

Figure 5: Vulnerability scan of leakix.net

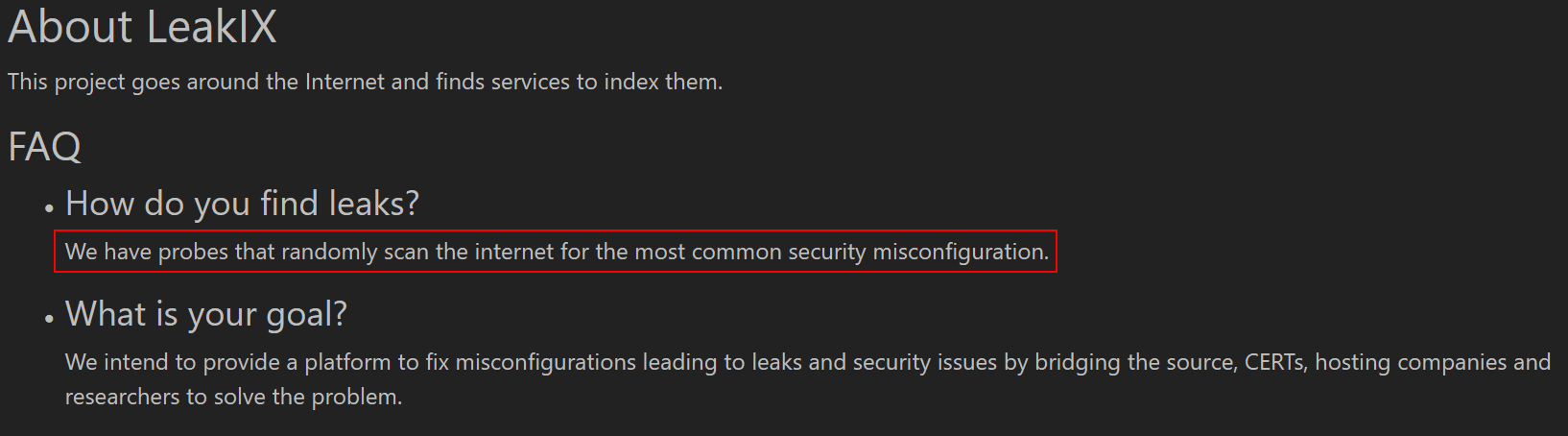

The page itself explains that the service scans the entire Internet randomly for known vulnerabilities:

Figure 6: leakix.net – About

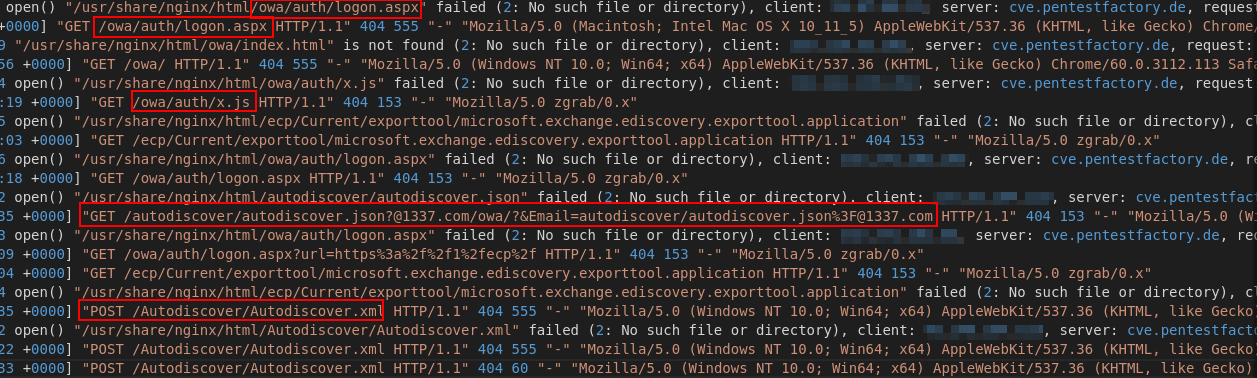

HAFNIUM Exchange Exploits (2.8%)

Furthermore, we identified 20 requests that attempted to detect or exploit parts of the HAFNIUM Exchange vulnerabilities (common IOCs can be found under https://i.blackhat.com/USA21/Wednesday-Handouts/us-21-ProxyLogon-Is-Just-The-Tip-Of-The-Iceberg-A-New-Attack-Surface-On-Microsoft-Exchange-Server.pdf):

- autodiscover.xml: Attempt to obtain the administrator account ID of the Exchange server

- \owa\auth\: Folder that shells are uploaded into post-compromise to establish a backdoor to the system

Figure 7: Attempted exploitation of HAFNIUM/Proxylogon Exchange vulnerabilities

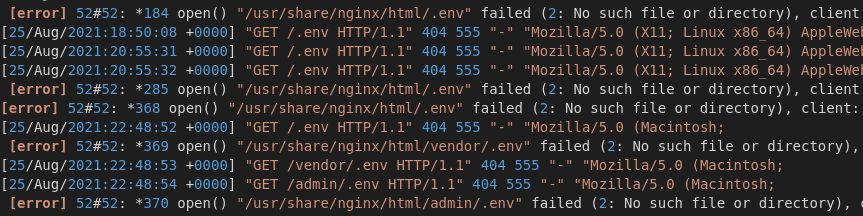

NGINX .env Sensitive Information Disclosure of Server Variables (1.5%)

Eleven requests attempted to read a .env file in the root directory of the webserver. Should this file exist and be accessible, it is likely to contain sensitive environment variables (such as passwords).

Figure 8: Attempts to read a .env file
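The request patterns described in the sections above can be recognized with simple path signatures. The following Python sketch is a hedged illustration of that idea: the signature strings are taken from the paths named in this article, while the labels, the function name and the substring matching are our own simplifications and will not catch obfuscated variants.

```python
# Path fragments named in this article, grouped under illustrative labels.
SIGNATURES = {
    "boaform admin interface": ("/boaform",),
    "Liferay /api/jsonws/invoke": ("/api/jsonws/invoke",),
    "Exchange (HAFNIUM/ProxyLogon)": ("autodiscover.xml", "/owa/auth"),
    ".env disclosure": ("/.env",),
}


def classify_path(path):
    """Return the label of the first signature found in the request path."""
    # Normalise Windows-style separators and case before matching.
    normalized = path.replace("\\", "/").lower()
    for label, needles in SIGNATURES.items():
        if any(needle in normalized for needle in needles):
            return label
    return "unclassified"
```

A request for `/autodiscover/autodiscover.xml`, for instance, would be labelled as an Exchange probe, while a regular call to `/api/status` stays unclassified.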

Remaining Requests (10.2%)

A further 58 requests were not part of larger scanning activities and probed for individual vulnerabilities:

- Server-Side Request Forgery attempts: 12 requests

- CVE-2020-25078: D-Link IP Camera Admin Password Exploit: 9 requests

- Hex-encoded Exploits/Payloads: 5 requests

- Spring Boot: Actuator Endpoint for reading (sensitive) server information: 3 requests

- Netgear Router DGN1000/DGN2200: Remote Code Execution Exploit: 2 requests

- Open Proxy CONNECT: 1 request

- Various single exploits or vulnerability checks: 27 requests

Furthermore the following harmless data was queried:

- favicon.ico – Bookmark graphic: 7 requests

- robots.txt – file for search engine indexing: 9 requests
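The percentages in the section headings can be reproduced from the request counts. The following Python sketch uses the counts and the total of 724 requests stated in this article. It assumes that the 10.2% of the last category also covers the 16 harmless requests (58 + 7 + 9 = 74); that reading is our interpretation of the numbers. Note that the category counts add up to 725, one more than the stated total.

```python
TOTAL_REQUESTS = 724  # total stated in this article

# Request counts per category as given in the individual sections.
COUNTS = {
    "Legitimate requests": 269,
    "Directory enumeration of database backends": 101,
    "Vulnerability scans from unknown sources": 102,
    "Requests to /": 83,
    "Vulnerability scans from leakix.net": 65,
    "HAFNIUM Exchange exploits": 20,
    ".env disclosure": 11,
    # Assumption: remaining requests plus favicon.ico and robots.txt.
    "Remaining requests": 58 + 7 + 9,
}


def shares(counts, total=TOTAL_REQUESTS):
    """Return the share of each category in percent, rounded to one decimal."""
    return {name: round(100 * count / total, 1) for name, count in counts.items()}


if __name__ == "__main__":
    for name, share in shares(COUNTS).items():
        print(f"{name}: {share}%")
```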

Conclusion

Using tools like zmap, attackers are able to scan the entire IPv4 address space in less than 5 minutes (see https://www.usenix.org/system/files/conference/woot14/woot14-adrian.pdf). The statistics above show that IT systems become a target of automated attacks and vulnerability scans as soon as they are reachable on the Internet. The size of a company or how well known it is does not matter, since attackers scan the Internet for vulnerable hosts and often cover the entire IPv4 address range.

Even applications that are hidden behind specific hostnames using common infrastructure components like reverse proxies or load balancers can be targeted. Contrary to a common assumption, a secret or unusual hostname is not hidden and does not protect against unauthorized access. As soon as you obtain SSL certificates for your services and applications, the hostnames are recorded in so-called SSL transparency logs (certificate transparency logs). These logs are publicly available, and hostnames can be queried using services like crt.sh, which allows automated tools to attack such hosts as well. Further information on this topic can be found in our article Subdomains under the hood: SSL Transparency Logs.
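To illustrate how easily hostnames can be collected from these logs, the following Python sketch queries crt.sh and extracts the hostnames from the result. It is a minimal example under stated assumptions: it relies on the JSON output of crt.sh (`output=json`) and its `name_value` field, which may contain several names separated by line breaks, and the function names are our own. Only query domains you are responsible for.

```python
import json
import urllib.parse
import urllib.request


def hostnames_from_crtsh(json_text):
    """Extract a sorted list of unique hostnames from a crt.sh JSON response."""
    names = set()
    for entry in json.loads(json_text):
        # Assumption: "name_value" holds one or more newline-separated names.
        for name in entry.get("name_value", "").splitlines():
            if name.strip():
                names.add(name.strip().lower())
    return sorted(names)


def fetch_crtsh(domain, timeout=30):
    """Download the certificate entries for all subdomains of a domain."""
    # "%." is the wildcard prefix; urlencode escapes the percent sign.
    query = urllib.parse.urlencode({"q": f"%.{domain}", "output": "json"})
    with urllib.request.urlopen(f"https://crt.sh/?{query}", timeout=timeout) as response:
        return response.read().decode("utf-8")
```

For example, `hostnames_from_crtsh(fetch_crtsh("example.com"))` would return every hostname of that domain that appears in a logged certificate.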

The implementation of access controls and hardening measures thus has to be done before your services and applications are exposed to the Internet. As soon as an IT system is reachable on the Internet, you have to expect active attacks that may succeed in the worst case.

Recommendation

Expose only required network services publicly

When you publish IT systems on the public Internet, you should only expose services that are required for the business purpose. For a web application or service based on the HTTP(S) protocol, this usually means that only TCP port 443 is required.

Refrain from exposing the entire host (all available network services) on the Internet.
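Whether a host exposes more than intended can be checked from the outside with a simple TCP connect test. The following Python sketch is a rough illustration and no replacement for a real port scanner: the function names and the default of allowing only port 443 are assumptions for this example, and it should only be run against your own systems.

```python
import socket


def open_tcp_ports(host, ports, timeout=1.0, connect=socket.create_connection):
    """Return the ports on which a TCP connection could be established."""
    reachable = []
    for port in ports:
        try:
            # A successful connect means a service is listening on that port.
            with connect((host, port), timeout=timeout):
                reachable.append(port)
        except OSError:
            # Refused, filtered or timed out: treat the port as not reachable.
            pass
    return reachable


def unexpected_ports(host, ports, allowed=(443,), **kwargs):
    """Return reachable ports that are not part of the intended exposure."""
    return [port for port in open_tcp_ports(host, ports, **kwargs) if port not in allowed]
```

A call such as `unexpected_ports("www.example.com", [22, 80, 443, 3306, 8080])` (host name and port list are placeholders) would list every reachable port other than 443.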

Network separation

Implement a demilitarized zone (DMZ) using firewalls to achieve an additional layer of network separation between the public Internet and your internal IT infrastructure. Place all infrastructure components that you want to expose on the Internet in the designated DMZ. Further information can be found in the IT-Grundschutz (IT baseline protection) of the BSI.

Patch management and inventory creation

Keep all your software components up to date and implement a patch management process. Create an inventory of all IT infrastructure components, listing all used software versions, virtual hostnames, SSL certificate expiration dates, configuration settings, etc.

Further information can be found under: http://www.windowsecurity.com/uplarticle/Patch_Management/ASG_Patch_Mgmt-Ch2-Best_Practices.pdf

Hardening measures

Harden all exposed network services and IT systems according to the best practices of the vendor or the hardening guidelines of the Center for Internet Security (CIS). Change all default passwords and simple login credentials that may still exist from the development period, and configure your systems for production use. This includes deactivating debug features and testing endpoints. Implement all recommended HTTP response headers and harden the configuration of your webservers. Ensure that sensitive cookies have the Secure, HttpOnly and SameSite attributes set.
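The header and cookie recommendations can be verified with a small check. The following Python sketch is an illustration only: the list of response headers is an assumption based on commonly recommended security headers, not an authoritative list, and the function names are our own.

```python
# Assumption: a commonly recommended set of security headers.
RECOMMENDED_HEADERS = (
    "Strict-Transport-Security",
    "Content-Security-Policy",
    "X-Content-Type-Options",
    "X-Frame-Options",
    "Referrer-Policy",
)

# Cookie attributes named in this article.
COOKIE_FLAGS = ("secure", "httponly", "samesite")


def missing_headers(headers):
    """Return the recommended headers that are absent from a response."""
    present = {name.lower() for name in headers}  # header names are case-insensitive
    return [name for name in RECOMMENDED_HEADERS if name.lower() not in present]


def missing_cookie_flags(set_cookie_value):
    """Return the attributes missing from a single Set-Cookie header value."""
    # The first part is "name=value"; everything after it is an attribute.
    attributes = [part.strip().lower() for part in set_cookie_value.split(";")[1:]]
    names = {attribute.split("=", 1)[0] for attribute in attributes}
    return [flag for flag in COOKIE_FLAGS if flag not in names]
```

For instance, `missing_cookie_flags("session=abc; Secure; HttpOnly")` reports that the SameSite attribute is missing.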

Transport encryption

Offer your network services via an encrypted communication channel. This ensures the confidentiality and integrity of your data and allows clients to verify the authenticity of the server. Refrain from using outdated algorithms like RC4, DES, 3DES, MD2, MD4, MD5 or SHA1. Employ SSL certificates that are issued by a trustworthy certification authority, e.g., Let’s Encrypt. Keep these certificates up to date and renew them in time. Use a single, unique SSL certificate per application (service) and set the correct domain name in the certificate (Common Name and Subject Alternative Name). SSL wildcard certificates are only necessary in rare cases and are not recommended.
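For services written in Python, these recommendations could be approached as in the following sketch. It is a minimal example under assumptions: requiring at least TLS 1.2 is our choice and not stated above, the helper names are hypothetical, and the cipher check only looks for name components that indicate RC4, DES, 3DES or MD5.

```python
import ssl

# Name components that indicate an outdated algorithm in a cipher suite name.
WEAK_TOKENS = {"RC4", "DES", "3DES", "CBC3", "MD5"}


def hardened_server_context():
    """Create a server-side TLS context that rejects protocols below TLS 1.2."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # assumption: TLS 1.2+
    return context


def weak_cipher_names(cipher_names):
    """Return the cipher suite names that contain an outdated algorithm."""
    weak = []
    for name in cipher_names:
        # OpenSSL names use "-" as separator, TLS 1.3 names use "_".
        tokens = set(name.upper().replace("_", "-").split("-"))
        if tokens & WEAK_TOKENS:
            weak.append(name)
    return weak
```

The ciphers enabled in a context can then be checked with `weak_cipher_names(c["name"] for c in hardened_server_context().get_ciphers())`. The certificate and private key still have to be loaded with `load_cert_chain` before the context can be used.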

Access controls and additional security solutions

Limit access to your network services if they are not intended to be publicly available on the Internet. It may make sense to implement IP whitelisting, which limits connections to a trustworthy pool of static IPv4 addresses. Configure this behavior either in your firewall solution or directly within the deployed network service, if possible. Alternatively, you can also use SSL client certificates or Basic Authentication.

- Nginx Webserver: https://docs.nginx.com/nginx/admin-guide/security-controls/controlling-access-proxied-tcp/

- Apache Webserver: https://httpd.apache.org/docs/2.4/howto/access.html

- SSH: https://unix.stackexchange.com/a/406264
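If a service has to enforce the whitelist itself, the check can be sketched with the ipaddress module of the Python standard library. The networks below are placeholders from documentation address ranges and the function name is hypothetical; a firewall rule or the webserver configuration linked above remains the preferable place for this control.

```python
import ipaddress

# Hypothetical trusted networks (RFC 5737 documentation ranges).
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.17/32"),
]


def is_allowed(client_ip, allowed=ALLOWED_NETWORKS):
    """Return True if the client address lies in one of the trusted networks."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in allowed)
```

With these placeholder networks, `is_allowed("203.0.113.42")` is accepted, while `is_allowed("198.51.100.18")` is rejected.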

Implement additional security solutions for your network services, such as an Intrusion Prevention System (IPS) or a Web Application Firewall (WAF), to gain advanced protection against potential attacks. As an IPS we can recommend the open source solution Fail2ban. As a WAF, ModSecurity with the well-known OWASP Core Rule Set can be set up.

Fail2ban is an IPS written in Python that identifies suspicious activity based on log entries and regex filters and allows you to set up automated defensive actions. It can, for instance, recognize automated vulnerability scans, brute-force attacks or bot-based requests and block the attackers using iptables. Fail2ban is open source and can be used freely.

- Installation of Fail2ban

- Fail2ban can usually be installed using the native package manager of your Linux distribution. On Debian- or Ubuntu-based systems, the following command is usually sufficient:

sudo apt update && sudo apt install fail2ban

- Afterwards the Fail2ban service should have started automatically. Verify the successful startup using the following command:

sudo systemctl status fail2ban

- Configuration of Fail2ban

- After the installation of Fail2ban, a new directory /etc/fail2ban/ is available, which holds all relevant configuration files. By default, two configuration files are provided: /etc/fail2ban/jail.conf and /etc/fail2ban/jail.d/defaults-debian.conf. However, they should not be edited, since they may be overwritten by the next package update.

- Instead, create your own configuration files with the .local file extension. Settings in these files override the directives from the .conf files. The easiest method for most users is to copy the supplied jail.conf to jail.local and then edit the .local file as desired. The .local file only needs to hold the entries that should override the default configuration.

- Fail2ban for SSH

- After the installation of Fail2ban, a default jail is active for the SSH service on TCP port 22. Should you use a different port for your SSH service, you have to adapt the port setting in your jail.local file. Here you can also adapt important directives like findtime, bantime and maxretry, should you require a more specific configuration. Should you not require this protection, you can disable it by setting the directive enabled to false. Further information can be found under: https://wiki.ubuntuusers.de/fail2ban/
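A jail.local that contains only the overrides for the SSH jail could be generated as in the following Python sketch. It assumes the SSH jail is named sshd, as in the jail.conf shipped with current Fail2ban packages; the port and the timing values are placeholders and not recommendations.

```python
import configparser
import io


def render_jail_local(ssh_port=2222, maxretry=5, findtime=600, bantime=3600):
    """Render a minimal jail.local that only overrides the SSH jail."""
    config = configparser.ConfigParser()
    # Assumption: the SSH jail is called "sshd".
    config["sshd"] = {
        "enabled": "true",
        "port": str(ssh_port),       # placeholder for a non-default SSH port
        "maxretry": str(maxretry),   # failures before a ban
        "findtime": str(findtime),   # time window in seconds
        "bantime": str(bantime),     # ban duration in seconds
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    print(render_jail_local())
```

The printed text can be reviewed and then stored as /etc/fail2ban/jail.local, followed by a restart of the Fail2ban service.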

- Fail2ban for web services

- Furthermore, Fail2ban can be set up to protect against automated web attacks. You may, for instance, recognize attacks that try to enumerate web directories (Forceful Browsing) or known requests associated with vulnerability scans and block them.

- The community provides dedicated configuration files, which can be used freely:

- https://github.com/fail2ban/fail2ban/blob/master/config/filter.d/apache-botsearch.conf

- https://github.com/fail2ban/fail2ban/blob/master/config/filter.d/apache-badbots.conf

- https://github.com/fail2ban/fail2ban/blob/master/config/filter.d/nginx-botsearch.conf

- https://gist.github.com/dale3h/660fe549df8232d1902f338e6d3b39ed#file-nginx-badbots-conf

- Store these example filter configurations in the directory /etc/fail2ban/filter.d/ and configure a new jail in your jail.local file. An example is provided below.

- Blocking search requests from bots

- Automated bots and vulnerability scanners continuously crawl the entire Internet to identify vulnerable hosts and execute exploits. They often use well-known tools whose signature can be identified in the User-Agent HTTP header. Using this header, many simple bot attacks can be detected and blocked. Attackers can change this header, however, which leaves more advanced attacks undetected. The Fail2ban filters *badbots.conf are mainly based on the User-Agent header.

- Alternatively, it is also possible to block all requests that follow a typical attack pattern. This includes automated requests that continuously attempt to identify files or directories on the web server. Since this type of attack requests many file and directory names at random, a large share of these requests is likely to result in a 404 Not Found error. By analysing these errors in the associated log files, Fail2ban is able to recognize attacks and ban attacking systems early on.

- Example: Nginx web server:

1. Store the following file under /etc/fail2ban/filter.d/nginx-botsearch.conf

https://github.com/fail2ban/fail2ban/blob/master/config/filter.d/nginx-botsearch.conf

2. Add configuration settings to your /etc/fail2ban/jail.local:

[nginx-botsearch]

ignoreip = 127.0.0.0/8 10.0.0.0/8 172.16.0.0/12 192.168.0.0/16

enabled = true

port = http,https

filter = nginx-botsearch

logpath = /var/log/nginx/access.log

# Ban for 1 week

bantime = 604800

# Ban after 10 error messages

maxretry = 10

# Count error messages within a window of 1 minute

findtime = 60

3. If necessary, include further trustworthy IP addresses of your company in the ignoreip field, which shall not be blocked by Fail2ban. If necessary, adapt other directives according to your needs and verify the specified port number of the web server, as well as correct read permissions for the /var/log/nginx/access.log log file.

4. Restart the Fail2ban service

sudo systemctl restart fail2ban

Automated enumeration requests will now be banned as soon as they generate ten 404 errors within one minute. The IP address of the attacking system is blocked for one week using iptables and unblocked again afterwards. If desired, you can also be notified about IP bans via e-mail using additional configuration settings. Push notifications to your smartphone via a Telegram messenger bot are also possible. Overall, Fail2ban is very flexible and allows arbitrary ban actions, such as custom shell scripts, whenever a filter matches.
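The interplay of maxretry and findtime can be illustrated with a simplified model in Python. This is not the Fail2ban implementation, only a sketch of the sliding-window idea: the class and method names are hypothetical, and bantime as well as unbanning are left out.

```python
from collections import defaultdict, deque


class NotFoundBanTracker:
    """Simplified model of a jail that counts 404 errors per IP address."""

    def __init__(self, maxretry=10, findtime=60):
        self.maxretry = maxretry  # number of errors that triggers a ban
        self.findtime = findtime  # size of the time window in seconds
        self._hits = defaultdict(deque)

    def register_404(self, ip, timestamp):
        """Record a 404 error and return True if the address should be banned."""
        hits = self._hits[ip]
        hits.append(timestamp)
        # Forget errors that are older than the findtime window.
        while hits and timestamp - hits[0] > self.findtime:
            hits.popleft()
        return len(hits) >= self.maxretry
```

A scanner that causes one 404 error per second reaches the threshold with its tenth request, while a client that causes one error every 30 seconds never does.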

The following commands show the available jails and the IP addresses that are currently banned:

- View available jails

sudo fail2ban-client status

- View banned IP addresses in a jail (here the nginx-botsearch jail from the example above)

sudo fail2ban-client status nginx-botsearch

Fail2ban offers several ways to protect your services even better. Explore the additional filters and start using them, if desired. Alternatively, you can also create your own filters using regex and test them on log entries.
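Before deploying a custom filter, its regex can be tried out on sample log lines. The following Python sketch only approximates how Fail2ban handles the `<HOST>` placeholder (here simplified to IPv4 addresses), and the example expression, which matches the scanner user agents mentioned in this article, is hypothetical. The fail2ban-regex command line tool that ships with Fail2ban is the authoritative way to test a filter.

```python
import re

# Simplification: <HOST> is replaced by a pattern for IPv4 addresses only.
HOST_PATTERN = r"(?P<host>\d{1,3}(?:\.\d{1,3}){3})"

# Hypothetical failregex for access log lines in the "combined" format.
EXAMPLE_FAILREGEX = (
    r'^<HOST> \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} \d+ "[^"]*" '
    r'"[^"]*(?:zgrab|colly|leakix)[^"]*"$'
)


def compile_failregex(failregex):
    """Turn a Fail2ban-style expression into a compiled Python regex."""
    return re.compile(failregex.replace("<HOST>", HOST_PATTERN), re.IGNORECASE)


def matching_hosts(failregex, lines):
    """Return the source addresses of all log lines matched by the filter."""
    pattern = compile_failregex(failregex)
    hosts = []
    for line in lines:
        match = pattern.match(line.rstrip("\n"))
        if match:
            hosts.append(match.group("host"))
    return hosts
```

Running `matching_hosts(EXAMPLE_FAILREGEX, open("/var/log/nginx/access.log"))` would list the addresses that this filter would count as failures.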

Premade Fail2ban filter lists can be found here: https://github.com/fail2ban/fail2ban/tree/master/config/filter.d