Summary

During our technical password audits, we analysed more than 40,000 password hashes and cracked more than three quarters of them. This is mostly due to short passwords, an outdated password policy in the company, and frequent password reuse. Furthermore, administrative accounts are often not bound to the corporate password policy, which allows weak passwords to be set. Issues in the onboarding process of employees may also be abused by attackers to crack additional passwords. Oftentimes, a single password of a privileged user is enough to allow for a full compromise of the corporate IT infrastructure.

Task us with an Active Directory password audit to increase the resilience of your company against attackers in the internal network and to verify the effectiveness of your password policies. We gladly support you in identifying and remediating issues related to the handling of passwords and their respective processes.

Introduction

The Corona pandemic caused a sudden change towards home office working spaces. However, IT infrastructure components, like VPNs and remote access, were oftentimes not readily available during this shift. Many companies had to upgrade their existing solutions and retrofit their IT infrastructure.

Besides the newly acquired components, new accounts were also created for accessing company resources over the Internet. Where technically possible, companies implemented Single Sign-On (SSO) authentication, which requires users to log in only once with their domain credentials before access is granted to various company resources.

According to Professor Christoph Meinel, the director of the Hasso-Plattner-Institute, the Corona pandemic greatly increased the attack surface for cyber attacks and created complex challenges for IT departments. [1] Due to the increase in home office work, higher global Internet usage since the start of the pandemic and an extension of IT infrastructures, threat actors have gained new, attractive targets for hacking and phishing attacks. Looking at just the DE-CIX Internet backbone node in Frankfurt, where the traffic of various ISPs is aggregated, a new high of 9.1 terabits per second was registered. This value equals a data volume of 1800 downloaded HD movies per second and is a new record compared to prior peaks of 8.3 terabits per second. [2]

Assume Breach

The effects of the pandemic have thus continuously increased the attack surface of companies and their employees for cyber attacks. Terms like “Supply Chain Attacks” or “0-Day Vulnerabilities” are frequently brought up in the media, which shows that many enterprises are actively attacked and compromised. Oftentimes the compromise of a single employee or IT system is enough to obtain access to the internal network of a company. A multitude of attacks can lead to this, like phishing or the exploitation of specific vulnerabilities in public IT systems.

Microsoft operates according to the “Assume Breach” principle and expects that attackers have already gained access, rather than assuming that complete security of systems can be achieved. But what happens once an attacker is able to access a corporate network? How is it possible that the compromise of a regular employee account causes the entire network to break down? Sensitive resources and server systems are regularly updated and are not openly accessible. Only a limited number of people have access to critical systems. Furthermore, a company-wide password policy ensures that attackers cannot guess the passwords of administrators or other employees. Where is the problem?

IT-Security and Passwords

The annual statistics of the Hasso-Plattner-Institute from 2020 [3] illustrate that the most popular passwords amongst Germans are „123456“, „123456789“ or „passwort“, just like in the statistics from the prior years. This does not constitute sufficient password complexity – without even covering the reuse of passwords.

Most companies are aware of this issue and implement technical policies to prevent the use of weak passwords. Usually, group policies are applied for all employees via the Microsoft Active Directory service. Users are then forced to set passwords with a sufficient length as well as certain complexity requirements. Everyone knows phrases like “Your password has to contain at least 8 characters”. Does this imply that weak passwords are a thing of the past? Unfortunately not, since passwords like „Winter21!“ are still very weak and guessable, even though they are compliant with the company-wide password policy.

Online Attacks vs. Offline Attacks

For online services like Outlook Web Access (OWA) or VPN portals, where a user logs on with their username and password, the likelihood of a successful attack is greatly reduced. An attacker would have to identify a valid username and subsequently guess the respective password. Furthermore, solutions like account lockouts after multiple invalid login attempts, rate limiting or two-factor authentication (2FA) are used. These components reduce the success rate of attackers considerably, since the number of guessing attempts is limited.

But even if such defensive mechanisms are not present, the attack is still executed online: the attacker chooses a combination of username and password and sends it to the underlying web server. Only after the server has processed the login request does the attacker receive a response indicating a successful login or an error. This client-server communication limits the performance of the attack, since the time required is out of proportion to the chance of success. Even a simple 6-character password consisting only of lowercase letters, including the umlauts and ß (30 possible characters), would require 729 million requests to brute-force all possible combinations. Additionally, the attacker would already need to know the username of the victim or use further guessing to find it out. With a company-wide password policy and the defensive mechanisms above, the probability of a successful online brute-force attack is virtually zero.
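The figure of 729 million follows from an alphabet of 30 lowercase characters (26 letters plus ä, ö, ü and ß) and a password length of 6. The following Python sketch reproduces this arithmetic. The request rate of 100 logins per second is an assumed value for illustration only, not a measurement.

```python
# Size of the search space for an online brute-force attack.
ALPHABET_SIZE = 26 + 3 + 1  # a-z, the umlauts ä/ö/ü and ß
PASSWORD_LENGTH = 6

# Assumption for illustration only: the server answers 100 login requests per second.
ASSUMED_REQUESTS_PER_SECOND = 100

combinations = ALPHABET_SIZE ** PASSWORD_LENGTH
seconds = combinations / ASSUMED_REQUESTS_PER_SECOND
days = seconds / 86_400  # seconds per day

print(f"{combinations:,} combinations")
print(f"about {days:.0f} days at {ASSUMED_REQUESTS_PER_SECOND} requests per second")
```

Under this assumption, testing the full search space would take roughly 84 days for a single account, and any account lockout or rate limit pushes this far beyond what is practical.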

However, for offline attacks, where an attacker has typically captured or obtained a password hash, brute-forcing can be executed with a significantly higher performance. But where do these password hashes come from and why are they more prone to guessing attempts?

Password Hashes

Let us go through the following scenario: As a great car enthusiast Max M. is always looking for new offers on the car market. Thanks to the digital change, not only local car dealerships but also the Internet is available with a great variety of cars. Max gladly uses these online platforms to look out for rare deals. For the use of these services, he generally needs a user account to save his favorites and place bids. A registration via e-mail and the password “Muster1234” is quickly done. But how does a subsequent login with our new user work? As a layman, you would quickly come to the conclusion that the username and password are simply stored by the online service and compared upon logging in.

This is correct on an abstract level, but a few technical details are missing. After registration, the login credentials are stored in a database. However, the database does not contain the clear-text password of a user, but a so-called password hash. The password hash is derived from the user’s password by a mathematical one-way function. Instead of our password “Muster1234”, the database now contains a string like “VEhWkDYumFA7orwT4SJSam62k+l+q1ZQCJjuL2oSgog=”, and the mathematical function ensures that this computation is only possible in one direction. It is thus effectively not possible to reconstruct the clear-text password from the hash. This method ensures that the web hoster or page owner cannot access their customers’ passwords in clear-text.

During login, the clear-text password from the login form is sent to the application server, which applies the same mathematical function to the entered password and subsequently compares the result to the hash that is stored in the database. If both values are equal, the correct password was entered and the user is logged in. If the values differ, an incorrect password was submitted and the login results in an error. Modern applications implement further technical features, such as replicated databases or the use of “salted” hashing. These are, however, not relevant for our exemplary scenario.
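The registration and login flow described above can be sketched in a few lines of Python. The article does not name the hash function of the car platform, so unsalted SHA-256 with Base64 encoding is an assumption made here; the in-memory dictionary `user_db` and the e-mail address are likewise hypothetical stand-ins for a real database and user.

```python
import base64
import hashlib

# Hypothetical in-memory "database": maps an e-mail address to the stored password hash.
user_db: dict[str, str] = {}


def hash_password(password: str) -> str:
    """Apply the one-way function (assumed here: unsalted SHA-256, Base64-encoded)."""
    digest = hashlib.sha256(password.encode("utf-8")).digest()
    return base64.b64encode(digest).decode("ascii")


def register(email: str, password: str) -> None:
    # Only the hash is stored, never the clear-text password.
    user_db[email] = hash_password(password)


def login(email: str, password: str) -> bool:
    stored = user_db.get(email)
    # Hash the submitted password and compare it with the stored hash.
    return stored is not None and hash_password(password) == stored


register("max@example.com", "Muster1234")
print(login("max@example.com", "Muster1234"))  # True
print(login("max@example.com", "muster1234"))  # False
```

This mirrors the simplified scenario only. A production system would use a salted, deliberately slow password hashing function instead of a plain fast hash.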

An attacker who tries to compromise the user account with an online attack faces the difficulties described earlier. The provider of the car platform may allow only three failed logins before the user account is disabled for 5 minutes. An automated attack to guess the password is thus not feasible.

Should the attacker however gain access to the underlying database (e.g. using an SQL injection vulnerability), the outlook is different. An attacker then has access to the password hash and is able to conduct offline attacks. The mathematical one-way function is publicly known and can be used to compute hashes. An attacker may thus proceed in the following order:

- Choose any input string, which represents a guessing attempt of the password.

- Input the chosen string into the mathematical one-way function and compute its hash.

- Compare the computed hash with the password hash extracted from the application’s database:

  - If they are equal, the clear-text password has been successfully guessed.

  - If they are unequal, choose a new input string and try again.
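The guessing procedure listed above can be sketched as a short loop. The hash function (unsalted SHA-256), the word list and the "stolen" hash are assumptions chosen for illustration; they are not taken from a real system.

```python
import hashlib
from typing import Iterable, Optional


def one_way(candidate: str) -> str:
    # Assumed hash function for this sketch: unsalted SHA-256 as a hex string.
    return hashlib.sha256(candidate.encode("utf-8")).hexdigest()


def offline_guess(target_hash: str, candidates: Iterable[str]) -> Optional[str]:
    for candidate in candidates:                # choose an input string
        if one_way(candidate) == target_hash:   # compute its hash and compare
            return candidate                    # equal: password guessed
    return None                                 # no candidate matched


# Hypothetical hash as it might have been extracted from the application's database.
stolen_hash = one_way("Muster1234")
wordlist = ["123456", "passwort", "Muster123", "Muster1234"]

print(offline_guess(stolen_hash, wordlist))  # Muster1234
```

Everything happens locally, so neither a lockout nor rate limiting on the server can slow the loop down.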

This attack is significantly faster than an online attack, as no network communication takes place and no server-side security mechanisms become active. However, modern and secure hash functions are designed so that computing large numbers of hashes becomes expensive and thus infeasible for attackers. This is achieved by increasing the cost of a single hash computation by a factor n. For a single hash computation and comparison during a regular login, this additional cost is negligible. An attacker, however, needs a multitude of hash computations to break a hash, and each of them becomes more expensive by the factor n, so that successful guessing can require multiple years of processing time. With modern password hashing functions like Argon2 or PBKDF2, offline attacks become similarly costly to online attacks and are hard to carry out in a timely manner.
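As a minimal sketch of such a cost factor, the following example uses PBKDF2 from Python's standard library, where the iteration count takes the role of the factor n. The iteration count of 100,000 and the 16-byte salt are assumed values for illustration, not a recommendation from this article.

```python
import hashlib
import hmac
import os

# Assumed work factor for illustration; it corresponds to the factor n in the text.
ITERATIONS = 100_000


def derive(password: str, salt: bytes, iterations: int = ITERATIONS) -> bytes:
    # PBKDF2-HMAC-SHA256: the inner hash is repeated `iterations` times per guess.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)


def verify(password: str, salt: bytes, expected: bytes) -> bool:
    # Constant-time comparison of the freshly derived value with the stored one.
    return hmac.compare_digest(derive(password, salt), expected)


salt = os.urandom(16)  # random per-user salt, stored next to the hash
stored = derive("Muster1234", salt)

print(verify("Muster1234", salt, stored))  # True
print(verify("Muster123", salt, stored))   # False
```

A legitimate login pays this cost once, whereas an attacker pays it for every single guess.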

LM- and NT-Hashes

Our scenario can be translated to many other applications, like the logon to a Windows operating system. Similarly to the account of the online car dealership, Windows allows creating users that can log on to the operating system. Whether a login requires a password can be configured individually for every user account. The password is yet again stored as a hash and not in clear-text format. Microsoft uses two algorithms to compute the hash of a user password. The older of the two is called LM hash and is based on the DES algorithm. For security reasons, this hash type has been deactivated by default since Windows Vista and Windows Server 2008. As an alternative, the so-called NT hash was introduced, which is based on the MD4 algorithm.
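To make the NT hash more tangible: it is the MD4 digest of the password encoded as UTF-16LE, without any salt. The following sketch implements MD4 according to RFC 1320 in pure Python, because the `md4` algorithm of Python's `hashlib` may be unavailable on systems with current OpenSSL builds. It is meant for illustration only and is far slower than dedicated cracking tools.

```python
import struct

MASK = 0xFFFFFFFF
# Order in which the message words are used in round 3 (RFC 1320).
ROUND3_ORDER = (0, 8, 4, 12, 2, 10, 6, 14, 1, 9, 5, 13, 3, 11, 7, 15)


def _rotl(value: int, shift: int) -> int:
    # Rotate a 32-bit value to the left.
    return ((value << shift) | (value >> (32 - shift))) & MASK


def md4(data: bytes) -> bytes:
    """Pure-Python MD4 (RFC 1320), for illustration only."""
    # Padding: 0x80, zero bytes up to 56 mod 64, then the bit length (little-endian).
    msg = data + b"\x80"
    msg += b"\x00" * ((56 - len(msg) % 64) % 64)
    msg += struct.pack("<Q", (len(data) * 8) & 0xFFFFFFFFFFFFFFFF)

    state = [0x67452301, 0xEFCDAB89, 0x98BADCFE, 0x10325476]
    for offset in range(0, len(msg), 64):
        x = struct.unpack("<16I", msg[offset:offset + 64])
        a, b, c, d = state
        for i in range(48):
            if i < 16:    # round 1
                f, k, s, const = (b & c) | (~b & d), i, (3, 7, 11, 19)[i % 4], 0
            elif i < 32:  # round 2
                j = i - 16
                f = (b & c) | (b & d) | (c & d)
                k, s, const = (j % 4) * 4 + j // 4, (3, 5, 9, 13)[i % 4], 0x5A827999
            else:         # round 3
                f = b ^ c ^ d
                k, s, const = ROUND3_ORDER[i - 32], (3, 9, 11, 15)[i % 4], 0x6ED9EBA1
            a = _rotl((a + f + x[k] + const) & MASK, s)
            a, b, c, d = d, a, b, c  # rotate the register roles for the next step
        state = [(old + new) & MASK for old, new in zip(state, (a, b, c, d))]
    return struct.pack("<4I", *state)


def nt_hash(password: str) -> str:
    # NT hash: MD4 over the UTF-16LE encoded password, without a salt.
    return md4(password.encode("utf-16-le")).hex()


print(nt_hash("Muster1234"))
```

Because no salt is involved, two accounts with the same password always have the same NT hash.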

The password hashes are stored locally in the so-called SAM database on the hard drive of the operating system. Similarly to our previous scenario, the hash of the entered password is compared with the password hash stored in the SAM database. If both values are identical, the correct password was used and the user is logged on to the system.

In corporate environments, and especially in Microsoft Active Directory networks, these hashes are not only stored locally in the SAM database, but also on a dedicated server, the domain controller, in the NTDS database file. This allows for uniform authentication against databases, file servers and further corporate resources using the Kerberos protocol. It also reduces the complexity within the network, since IT assets and user accounts can be managed centrally via the Active Directory controllers. Using group policies, companies can furthermore ensure that employees must set a logon password and that the password adheres to a strict password policy. Passwords may need to be renewed on a regular basis. Based on the account password, it is also possible to implement Single Sign-On (SSO) for a variety of company resources, since the NT hash is stored centrally on the domain controllers. Besides the local SAM database on every machine and the domain controllers of an on-premises Active Directory solution, it is also possible to synchronize NT hashes with a cloud-based domain controller (e.g., Azure). This extends the possibilities of SSO logins to cloud assets, like Office 365. The password hashes of a user are thus used on several occasions, which increases the likelihood that they may be compromised.

Access to NT hashes

Several techniques exist for an attacker to obtain access to NT hashes. For brevity, we only mention a selection of well-known methods in this article:

- Compromising a single workstation (e.g., using a phishing e-mail) and dumping the local SAM database of the Windows operating system (e.g., using the tool “Mimikatz”).

- Compromising a domain controller in an Active Directory environment (e.g., using the PrintNightmare vulnerability) and dumping the NTDS database (e.g., via Mimikatz).

- Compromising a privileged domain user account with DCSync permissions (e.g., a domain admin or enterprise admin). Extracting all NT hashes from the domain controller in an Active Directory domain.

- Compromising a privileged Azure user account with the permissions to execute an Azure AD Connect synchronization. Extracting all NT hashes from the domain controller of an Active Directory domain.

- and many more attacks…

Password cracking

After the NT hashes of a company have been compromised, they can either be used in internal “relaying” attacks or targeted in password cracking attempts to recover the clear-text password of an employee.

This is possible since NT hashes in Active Directory environments use an outdated algorithm called MD4. This hash function was published in 1990 by Ronald Rivest and was considered insecure relatively quickly. A main problem of the hash function is its lack of collision resistance: different input values that generate the same output hash can be found with little effort. This undermines a core property of a cryptographic hash function.

Furthermore, MD4 is very fast to compute and does not slow down cracking attempts, as opposed to modern password hashing functions like Argon2. This allows attackers to execute effective offline attacks against NT hashes. A modern gaming computer with a recent graphics card is able to compute 50 to 80 billion hashes per second. Cracking short or weak passwords thus becomes trivial.

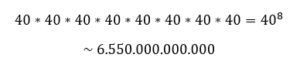

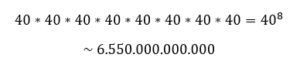

To illustrate the implications of this cracking speed, we analyse all possible combinations of 8-character passwords. To simplify the analysis, we assume that the password consists only of lowercase letters and digits. The German alphabet contains 26 base letters as well as the three umlauts ä, ö, ü and the special letter ß. In addition, there are 10 different digits from 0 to 9. This results in 40 possible values for every position of our 8-character password, and thus in about 6,550 billion possible combinations: 40^8 = 6,553,600,000,000.

A gaming computer that generates 50 billion hashes per second would thus require only 131 seconds to test all 6,550 billion possibilities in our 8-character password space. Such a password would be cracked in a bit more than 2 minutes. Real threat actors employ special password cracking rigs, which can compute roughly 500 to 700 billion hashes per second. These systems cost around €10,000 to set up.
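The calculation can be reproduced with a few lines of Python, using the alphabet of 40 characters and the hash rates quoted in the text. The helper names and the additional password lengths of 10 and 12 characters are our own additions for comparison.

```python
ALPHABET_SIZE = 26 + 4 + 10   # a-z, ä/ö/ü/ß and the digits 0-9
GAMING_PC_RATE = 50e9         # hashes per second (lower bound quoted above)
CRACKING_RIG_RATE = 500e9     # hashes per second (lower bound quoted above)


def keyspace(alphabet_size: int, length: int) -> int:
    # Number of possible passwords of exactly `length` characters.
    return alphabet_size ** length


def seconds_to_exhaust(alphabet_size: int, length: int, hashes_per_second: float) -> float:
    # Worst case: every candidate in the keyspace has to be hashed once.
    return keyspace(alphabet_size, length) / hashes_per_second


for length in (8, 10, 12):
    pc = seconds_to_exhaust(ALPHABET_SIZE, length, GAMING_PC_RATE)
    rig = seconds_to_exhaust(ALPHABET_SIZE, length, CRACKING_RIG_RATE)
    print(f"length {length}: {keyspace(ALPHABET_SIZE, length):,} combinations, "
          f"gaming PC {pc:,.0f} s, cracking rig {rig:,.0f} s")
```

For a length of 8 this yields the 131 seconds mentioned above. Each additional character multiplies the effort by 40, so two extra characters increase it by a factor of 1,600.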

Furthermore there is a variety of cracking methods that do not aim at brute-forcing the entire keyspace (all possible passwords). This allows cracking passwords that have more than 12 characters, which would require several years for a regular keyspace brute-force.

Such techniques are:

- Dictionary lists (e.g., a German dictionary)

- Password lists (e.g., from public leaks or breaches)

- Rule based lists, combination attacks, keywalks, etc.
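A rule-based attack can be sketched as follows: instead of testing every possible string, a small dictionary is expanded with typical modifications such as capitalization, appended numbers and a trailing special character. The word list, suffixes and rules below are hypothetical examples; real cracking tools ship far larger rule sets.

```python
from itertools import product
from typing import Iterable, Iterator


def apply_rules(word: str, suffixes: Iterable[str], specials: Iterable[str]) -> Iterator[str]:
    """Yield candidates: lowercase/capitalized word + numeric suffix + optional special character."""
    variants = (word.lower(), word.capitalize())
    for base, suffix, special in product(variants, suffixes, specials):
        yield f"{base}{suffix}{special}"


# Hypothetical, very small inputs for illustration.
dictionary = ["winter", "sommer", "passwort"]
suffixes = ["", "1", "21", "2021"]
specials = ["", "!"]

candidates = [c for word in dictionary for c in apply_rules(word, suffixes, specials)]

print(len(candidates))             # 48
print("Winter21!" in candidates)   # True
```

With only 48 candidates, a policy-compliant password like “Winter21!” is found immediately, whereas a full brute-force of the corresponding 9-character keyspace would take far longer.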

Pentest Factory password audit

When you task us with an Active Directory password audit, we execute exactly these attack scenarios. In a first step, we extract the NT hashes of all your employees from a domain controller, without any association to user accounts. Our approach is coordinated with your works council and follows a proven process.

Afterwards we execute a real cracking attack on the extracted hashes to compute clear-text passwords. We employ several modern and realistic attack techniques and execute our attacks using a cracking rig with a hash power of 100 billion hashes per second.

After finalising the cracking phase, we create a final report that details the results of the audit. The report contains metrics regarding compromised employee passwords and lists extensive recommendations on how password security can be improved in your company in the long term. Oftentimes, we are able to reveal procedural problems, e.g., with the onboarding of new employees, or misconfigurations in group and password policies. Furthermore, we offer to conduct a password audit with user correlation. We have established a process that allows you as a customer to associate compromised passwords with the respective employee accounts (without our knowledge). This process is also coordinated with your works council and adheres to common data privacy regulations.

Statistics from our past password audits

Since the founding of Pentest Factory GmbH in 2019, we have conducted several password audits for our clients and improved their handling of passwords. Besides technical misconfigurations, like a password policy that was not applied uniformly, we found procedural problems on several occasions. Especially the onboarding of new employees creates insecure processes that lead to weak passwords being chosen. It also occurs that administrative users can choose passwords independently of established policies. Since these users are highly privileged, weak passwords contribute to a significantly increased risk of a breach. Should an attacker be able to guess the password of an administrative user, this could result in a compromise of the entire company IT infrastructure.

To give you an insight into our work, we want to present statistics from our previously executed password audits.

Across all audits performed, we evaluated 32,493 unique password hashes. Including reused passwords, the total is 40,288 password hashes. This means that 7,795 hashes were duplicates, i.e., they belonged to passwords that were used by several user accounts at the same time. These are oftentimes passwords like “Winter2021” or passwords that were handed out during initial onboarding and never changed. The highest reuse we detected was a single password shared by around 450 accounts. This was an initialization password that had not been changed by the respective users.
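Because NT hashes are unsalted, identical passwords produce identical hashes, so password reuse is visible even before a single hash has been cracked. A minimal sketch of such a reuse count, using invented account names and shortened placeholder hashes:

```python
from collections import Counter

# Hypothetical extract: one NT hash per account (placeholder values, shortened for readability).
account_hashes = {
    "alice": "aa11",
    "bob": "bb22",
    "carol": "aa11",
    "dave": "aa11",
    "erin": "cc33",
}

counts = Counter(account_hashes.values())
total_hashes = len(account_hashes)   # all hashes, including reused ones
unique_hashes = len(counts)          # distinct hashes
reused = {h: n for h, n in counts.items() if n > 1}

print(total_hashes - unique_hashes)  # 2
print(reused)                        # {'aa11': 3}
```

Applied to the figures above, the same subtraction gives 40,288 minus 32,493, i.e., 7,795 hashes that duplicate another account's hash.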

Of the 32,493 unique password hashes, we were able to crack 26,927 and recover their clear-text passwords. This amounts to over 82%, meaning that we were able to break more than four out of five unique passwords during our audits. An alarming statistic.
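The crack rate can be verified directly from the two numbers given above. The variable names are our own.

```python
UNIQUE_HASHES = 32_493
CRACKED_UNIQUE = 26_927

crack_rate = CRACKED_UNIQUE / UNIQUE_HASHES

print(f"{crack_rate:.1%}")   # 82.9%
print(crack_rate > 3 / 4)    # True
```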

This is mainly because passwords with a length less than 12 characters were used. The following figure highlights this insight.

Note: The figure does not include all cracked password lengths. Exceptions like password lengths over 20 characters or very short or even empty passwords were omitted.

Furthermore, our statistics show the effects of an overly weak password policy, as well as issues with applying a password policy company-wide.

Note: The below figure does not contain the password masks of all cracked passwords but only a selection.

A multitude of employee passwords were guessable because they were based on a known password mask. Over 12,000 cracked passwords consisted of an initial string followed by numerical values. This includes especially weak passwords like “Summer2019” and “password1”.
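A password mask describes the character class at each position of a password. The following sketch derives a mask in the notation used by the cracking tool hashcat (`?l` lowercase, `?u` uppercase, `?d` digit, `?s` special) and checks for the "string followed by digits" pattern. The mapping is a simplification: only ASCII letters are treated as letters, and everything else is counted as special.

```python
import re
import string


def to_mask(password: str) -> str:
    """Translate a password into a hashcat-style mask (simplified to ASCII classes)."""
    mask = []
    for ch in password:
        if ch in string.digits:
            mask.append("?d")
        elif ch in string.ascii_lowercase:
            mask.append("?l")
        elif ch in string.ascii_uppercase:
            mask.append("?u")
        else:
            mask.append("?s")  # simplification: everything else counts as special
    return "".join(mask)


# One or more letters followed by one or more digits, nothing else.
WORD_THEN_DIGITS = re.compile(r"^[A-Za-z]+[0-9]+$")

for password in ("Summer2019", "password1", "Winter21!"):
    print(password, to_mask(password), bool(WORD_THEN_DIGITS.match(password)))
```

Counting how often each mask occurs in a set of cracked passwords shows which patterns an attacker would try first.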

These passwords are usually already part of publicly available password lists. One of the best-known password lists is called “Rockyou”. It contains more than 14 million unique passwords from a breach of the company RockYou in 2009. The company fell victim to a hacker attack and had saved all of its customers’ passwords in clear-text in its database. The hackers were able to access this data and published the records afterwards.

On the basis of these leaks it is possible to generate statistics about the structure of user passwords. These statistics, patterns and rules for password creation can subsequently be used to break another myriad of password hashes. The use of a password manager, which creates cryptographically random and complex passwords, can prevent these rule-based attacks and make it harder for patterns to occur.

Recommendations regarding password security

Our statistics have shown that a strict and modern password policy can reduce the success rate of a cracking or guessing attack drastically. Nevertheless, password security is based on multiple factors, which we want to illustrate as follows.

Password length

Move away from outdated password policies that only enforce a password length of 8 characters. The costs for modern and powerful hardware are continuously decreasing, which allows even attackers with a low budget to effectively execute password cracking attacks. The continuous growth of cost-effective cloud services furthermore enables attackers to execute attacks dynamically based on a fixed budget, without having to buy hardware or set up systems.

Even a password length of 10 characters can significantly increase the effort needed to crack a password, even considering modern cracking systems. For companies that employ Microsoft Active Directory, we nevertheless recommend a minimum password length of 12 characters.

Complexity

Ensure that passwords have sufficient complexity by implementing the following minimum requirements:

- The password contains at least one lowercase letter

- The password contains at least one uppercase letter

- The password contains at least one digit

- The password contains at least one special character
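The requirements above, combined with the recommended minimum length of 12 characters, can be expressed as a simple check. This is a sketch of the rules only, with names of our own choosing; in an Active Directory environment the enforcement itself is done by the password policy, not by a script.

```python
MIN_LENGTH = 12  # minimum length recommended in this article for Active Directory


def policy_violations(password: str, min_length: int = MIN_LENGTH) -> list[str]:
    """Return the list of requirements the password does not meet."""
    checks = {
        f"at least {min_length} characters": len(password) >= min_length,
        "at least one lowercase letter": any(c.islower() for c in password),
        "at least one uppercase letter": any(c.isupper() for c in password),
        "at least one digit": any(c.isdigit() for c in password),
        "at least one special character": any(not c.isalnum() for c in password),
    }
    return [rule for rule, fulfilled in checks.items() if not fulfilled]


print(policy_violations("Winter21!"))               # ['at least 12 characters']
print(policy_violations("correct-Horse-7battery"))  # []
```

Passing these checks does not make a password strong by itself, since predictable patterns can satisfy every rule.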

Regular password changes

Regular changes of passwords are not recommended by the BSI anymore, as long as the password is only accessible by authorized persons. [4]

Should the password have been compromised, which implies that it is known to an unauthorized person, it has to be ensured that the password is changed immediately. Furthermore it is recommended to regularly check public databases regarding new password leaks of your company. We gladly support you in this matter in our Cyber Security Check.

Password history

Ensure that users cannot choose passwords that they have previously used. Implement a password history that contains the last 24 used password hashes and prevents their reuse.

Employment of blacklists

Implement additional checks that prevent the use of known blacklisted words. This includes your own company name, seasons of the year, the names of clients, service owners and products, or single words like “password”. Ensure that these blacklisted words are not only forbidden organisationally, but also on a technical level.
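A technical blacklist check can be as simple as a case-insensitive substring search. The sketch below uses a hypothetical word list, with `examplecorp` standing in for the company name.

```python
# Hypothetical blacklist; "examplecorp" is a placeholder for the own company name.
BLACKLIST = frozenset(
    {"password", "passwort", "spring", "summer", "autumn", "winter", "examplecorp"}
)


def blacklisted_words_in(password: str, blacklist: frozenset = BLACKLIST) -> list[str]:
    """Return all blacklisted words contained in the password (case-insensitive)."""
    lowered = password.lower()
    return sorted(word for word in blacklist if word in lowered)


print(blacklisted_words_in("Winter2021!"))            # ['winter']
print(blacklisted_words_in("ExampleCorp#Passwort1"))  # ['examplecorp', 'passwort']
print(blacklisted_words_in("t9#Lq!vR2m@Zx"))          # []
```

A password would be rejected whenever the returned list is not empty.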

Automatic account lockout

Configure an automatic account lockout for multiple invalid logins to actively prevent online attacks. A proven guideline is locking a user account after 5 failed login attempts for 5-10 minutes. Locked accounts should be unlocked automatically after a set timespan, so regular usage continues and help desk overloads are prevented.
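The lockout logic described above can be sketched as follows, with 5 failed attempts and a lockout duration of 5 minutes. The class and its method names are hypothetical; in Active Directory, the equivalent behavior is configured through the account lockout policy rather than implemented by hand.

```python
import time

MAX_FAILED_ATTEMPTS = 5
LOCKOUT_SECONDS = 5 * 60


class LockoutTracker:
    """Hypothetical tracker that locks an account after repeated failed logins."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock  # injectable clock, which makes the sketch testable
        self._failures: dict[str, int] = {}
        self._locked_until: dict[str, float] = {}

    def is_locked(self, user: str) -> bool:
        until = self._locked_until.get(user)
        if until is None:
            return False
        if self._clock() >= until:
            # Lockout expired: unlock automatically and reset the counter.
            del self._locked_until[user]
            self._failures.pop(user, None)
            return False
        return True

    def record_failure(self, user: str) -> None:
        if self.is_locked(user):
            return
        self._failures[user] = self._failures.get(user, 0) + 1
        if self._failures[user] >= MAX_FAILED_ATTEMPTS:
            self._locked_until[user] = self._clock() + LOCKOUT_SECONDS

    def record_success(self, user: str) -> None:
        # A successful login resets the failure counter.
        self._failures.pop(user, None)


# Usage with a fake clock so that no real waiting is needed.
now = [0.0]
tracker = LockoutTracker(clock=lambda: now[0])
for _ in range(MAX_FAILED_ATTEMPTS):
    tracker.record_failure("max")
print(tracker.is_locked("max"))  # True

now[0] += LOCKOUT_SECONDS
print(tracker.is_locked("max"))  # False
```

With these values, an attacker is limited to 5 guesses per account every 5 minutes, which rules out automated online guessing at scale.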

Sensitization

The sensitization of all employees including the management is essential to increase the security posture company-wide. Regular sensitization measures should become a part of the company’s culture so correct handling of sensitive access data is internalized.

A deliberate change in behavior is necessary in security-relevant situations, e.g.,

- lock your machine, even if you leave your desk only briefly;

- lock away confidential documents;

- never share your password with anyone;

- use secure (strong) passwords;

- do not use the same password more than once.

Despite a technically strict password policy, users might still choose weak passwords that can be guessed with ease. Only the execution of regular password audits and a sensitization of employees can prevent damage in the long term.

Use of two-factor authentication (2FA)

Configure additional security features such as two-factor authentication. This ensures that even if a password guessing attempt is successful, the attacker cannot gain access to the user account (and company resources) without a secondary token.

Regular password audits

Execute regular password audits to identify user accounts with weak or reused passwords and protect them from future attacks. A continuous re-evaluation of your company-wide password policy and further awareness seminars enable you to generate metrics on a technical level that allow you to continuously measure and improve password security in your company.

Differentiated password policies

Introduce multiple password policies based on the protection level of the respective target group. Low-privileged user accounts can thus be required to choose a password with a minimum length of 12 characters including complexity requirements, while administrative user accounts have to follow a stricter policy with at least 14 characters.

Additional security features

We gladly advise you regarding additional security features in your Active Directory environment to improve password security. This includes:

- Local Administrator Password Solution (LAPS)

- Custom .DLL Password Filters

- Logging and Monitoring of Active Directory Events

Commissioning

Should we have sparked your interest in a password audit, we are looking forward to hearing from you. We gladly support you in evaluating the password security of your company, as well as making long-term improvements.

You can also use our online configurator to commission an audit.

More information regarding our password audit can be found under: https://www.pentestfactory.de/passwort-audit

Sources

[1] https://hpi.de/news/jahrgaenge/2020/die-beliebtesten-deutschen-passwoerter-2020-platz-6-diesmal-ichliebedich.html

[2] https://www.kas.de/documents/252038/7995358/Die+Auswirkungen+von+COVID-19+auf+Cyberkriminalit%C3%A4t+und+staatliche+Cyberaktivit%C3%A4ten.pdf/8ecf7084-704b-6810-4374-5840a6954b9f?version=1.0&t=1591354253482

[3] https://hpi.de/news/jahrgaenge/2020/die-beliebtesten-deutschen-passwoerter-2020-platz-6-diesmal-ichliebedich.html#:~:text=Das%20Hasso%2DPlattner%2DInstitut%20(,sind%20und%202020%20geleakt%20wurden.

[4] https://www.bsi.bund.de/SharedDocs/Downloads/DE/BSI/Grundschutz/Kompendium/IT_Grundschutz_Kompendium_Edition2020.pdf – Section ORP.4.A8

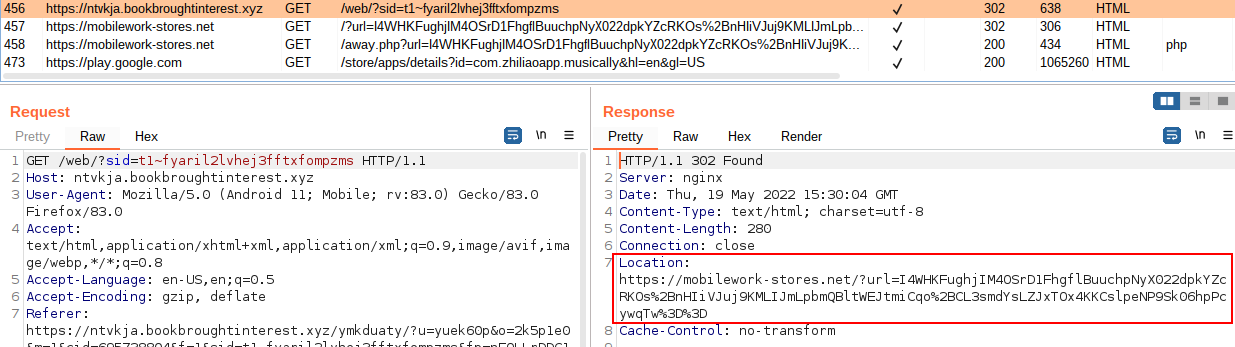

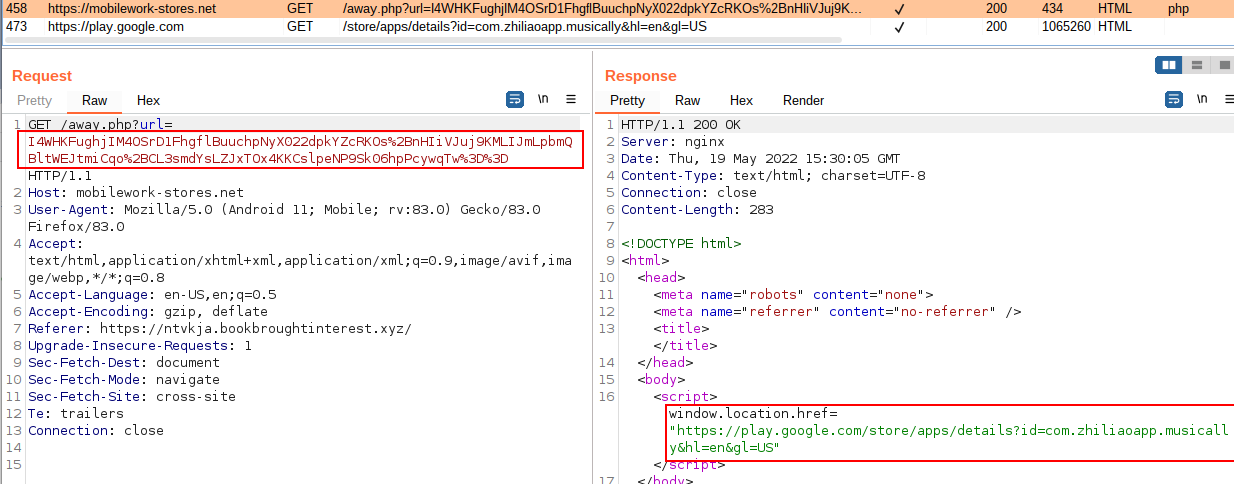

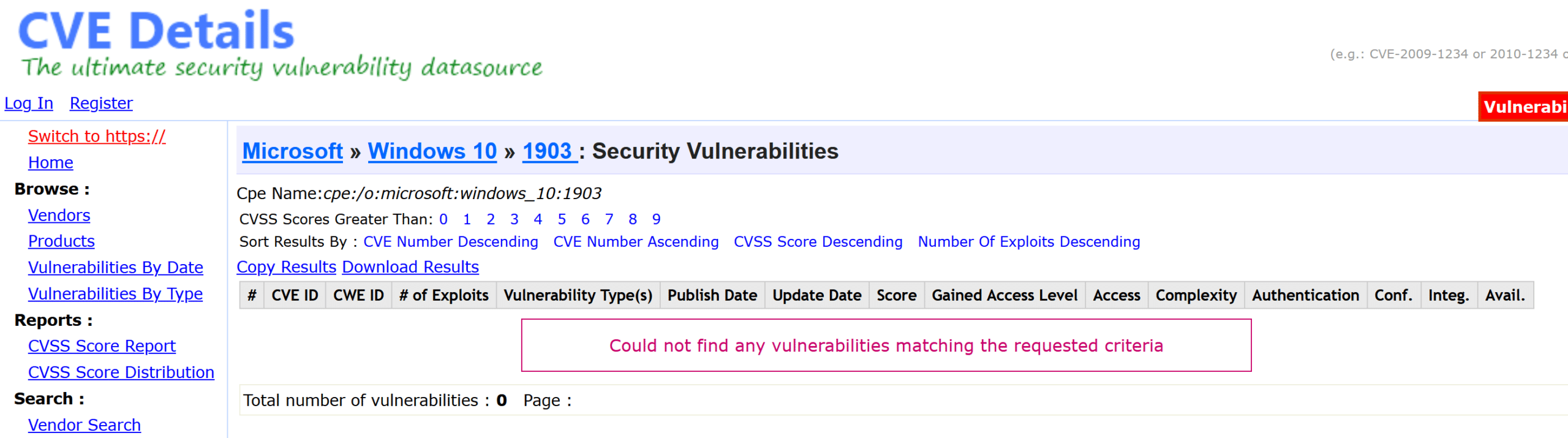

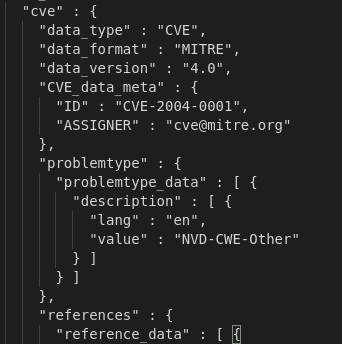

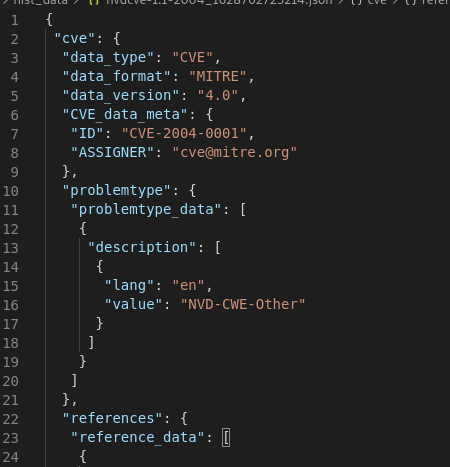

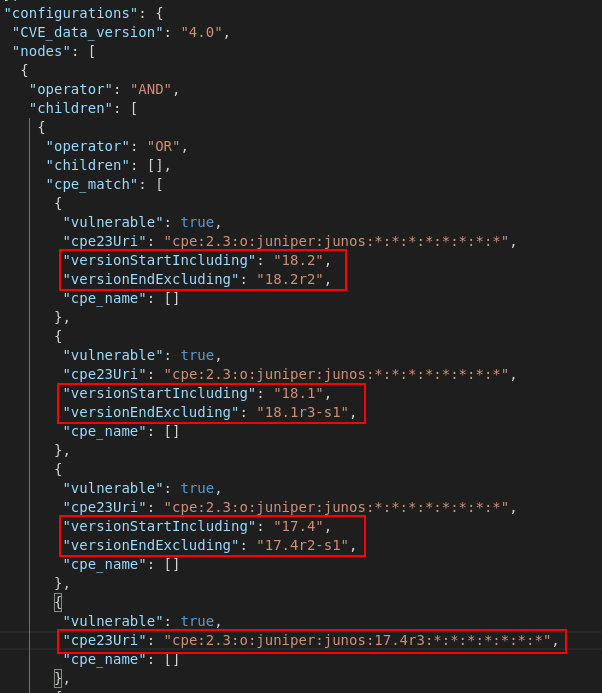

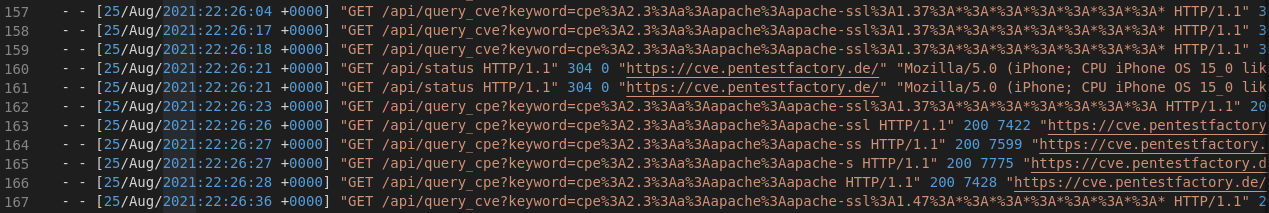

Figure: Incorrect vulnerability results for Windows 10

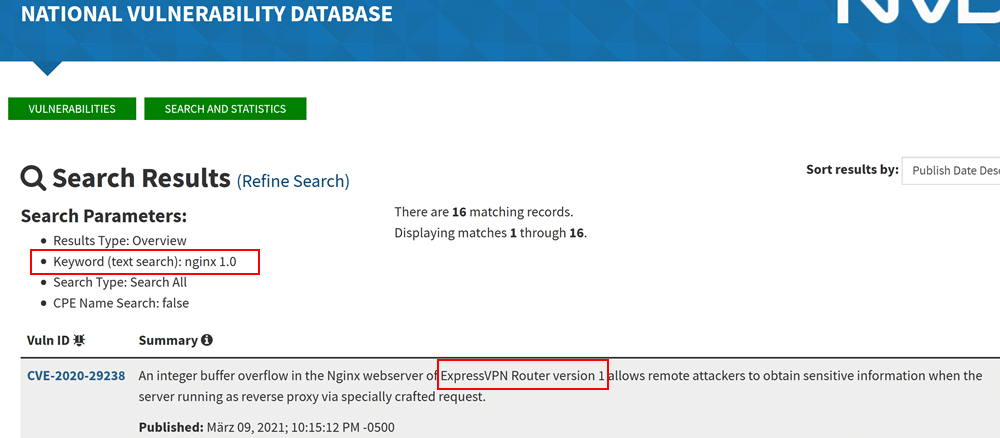

Figure: Incorrect vulnerability results for Windows 10 Figure: Keyword search returns a different product than the originally searched for product

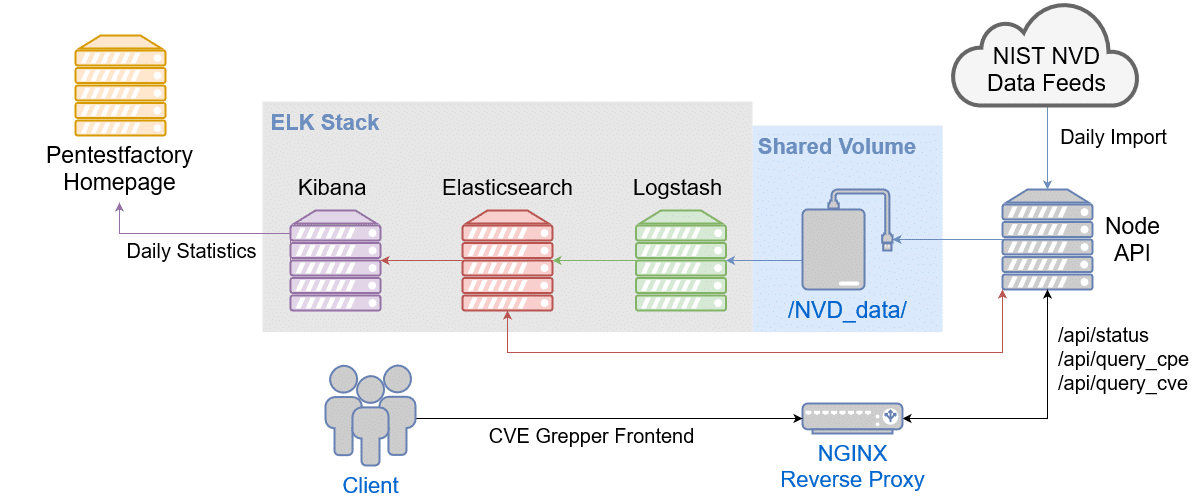

Figure: Keyword search returns a different product than the originally searched for product Figure: Overview of the technical components of the vulnerability database

Figure: Overview of the technical components of the vulnerability database

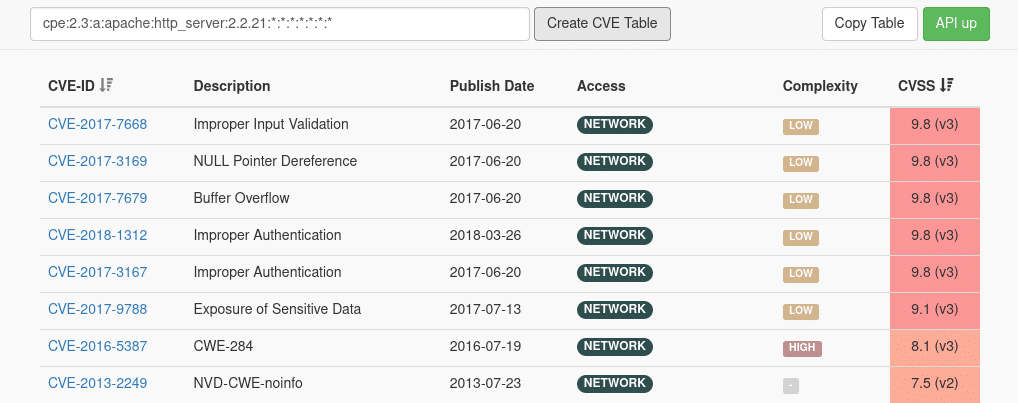

Figure: Preview of our vulnerability query frontend

Figure: Preview of our vulnerability query frontend

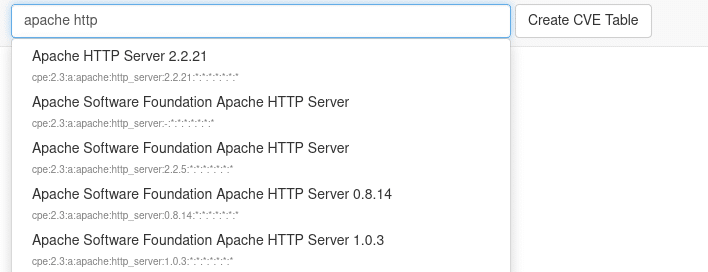

Figure: Autocomplete mechanism of the query frontend

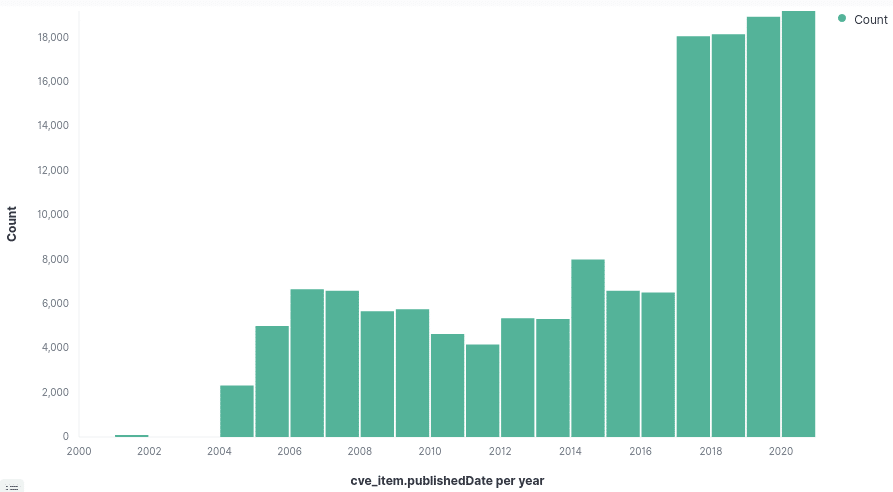

Figure: Autocomplete mechanism of the query frontend Figure: Amount of registered vulnerabilities per year

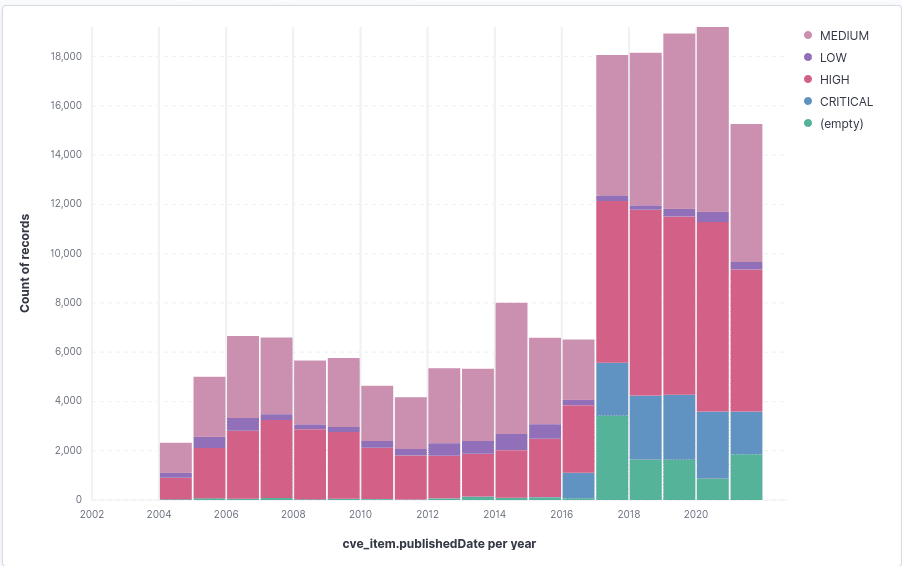

Figure: Amount of registered vulnerabilities per year Figure: Fractions of the respective risk severity groups per year

Figure: Fractions of the respective risk severity groups per year

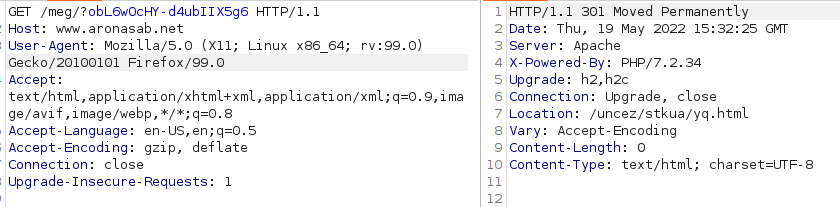

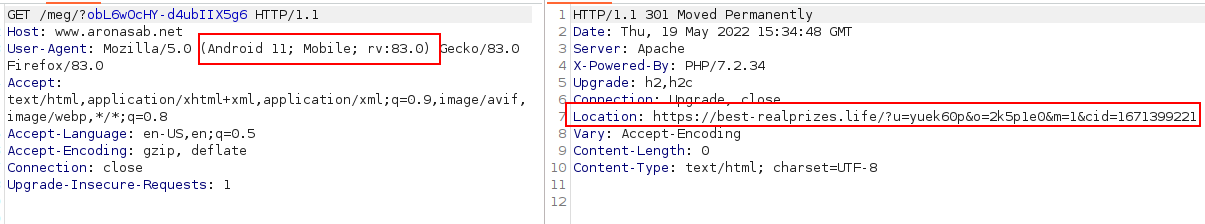

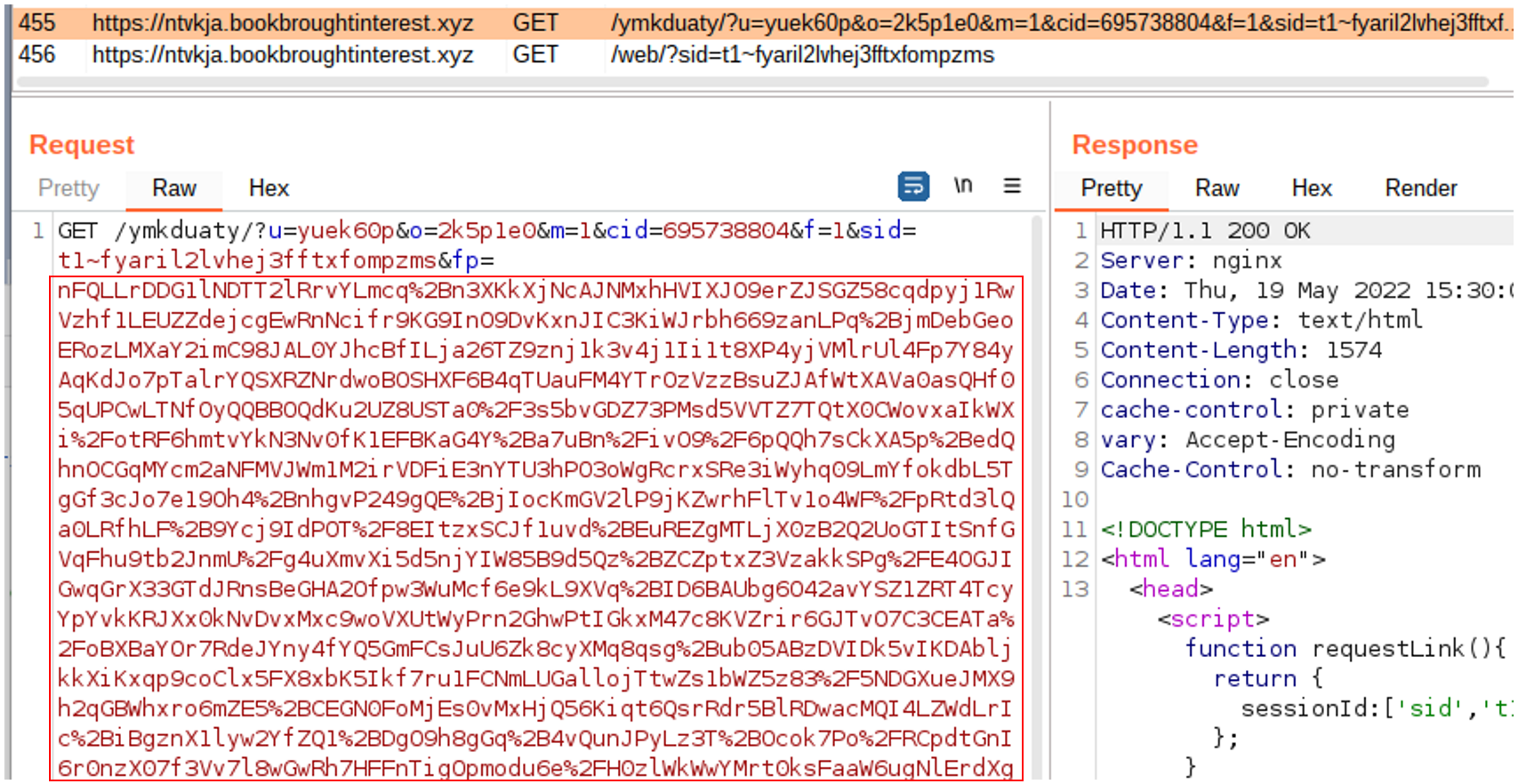

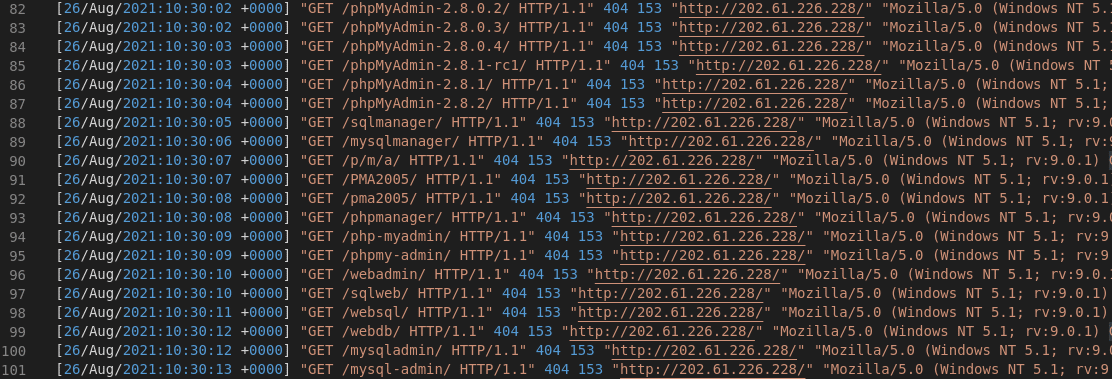

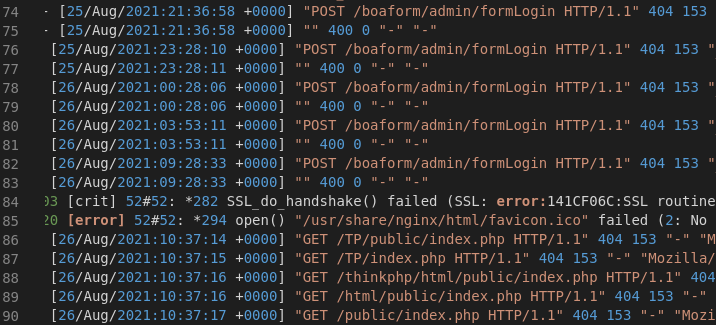

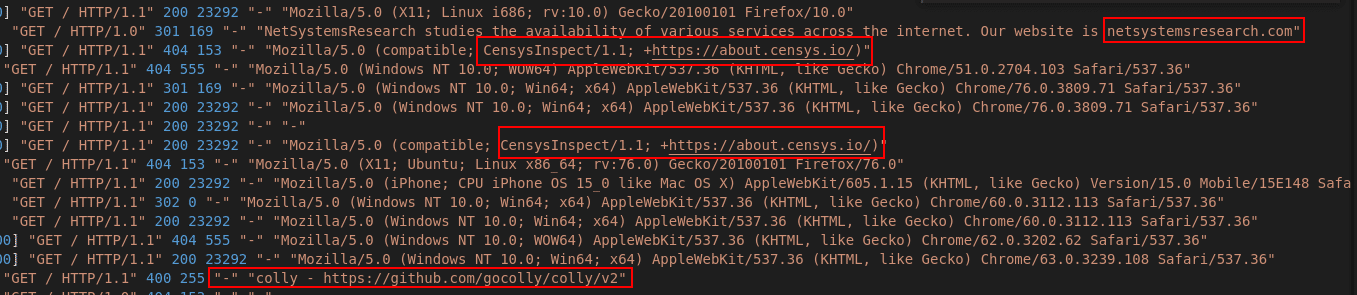

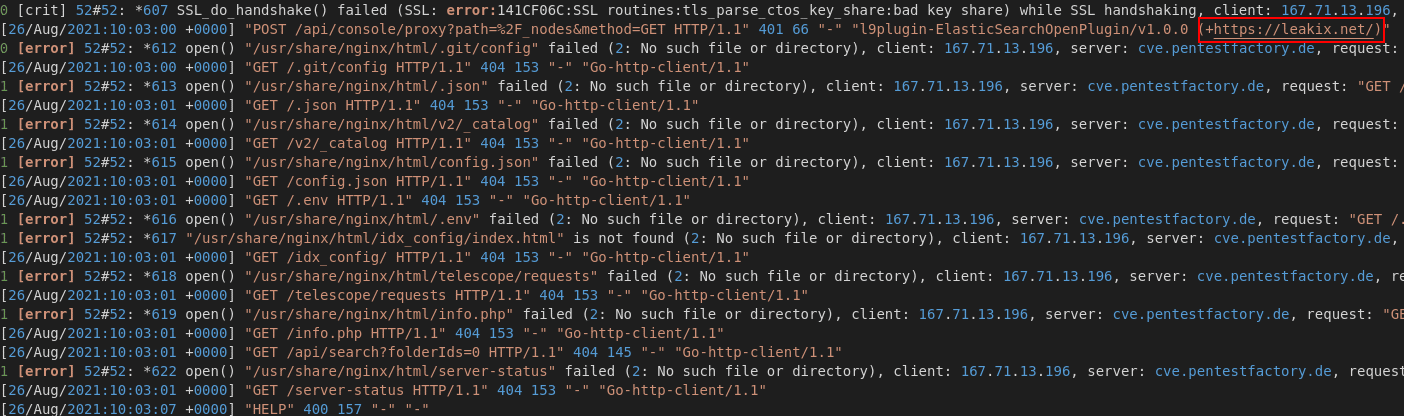

Figure 1: Sample of legitimate requests to the webserver

Figure 1: Sample of legitimate requests to the webserver

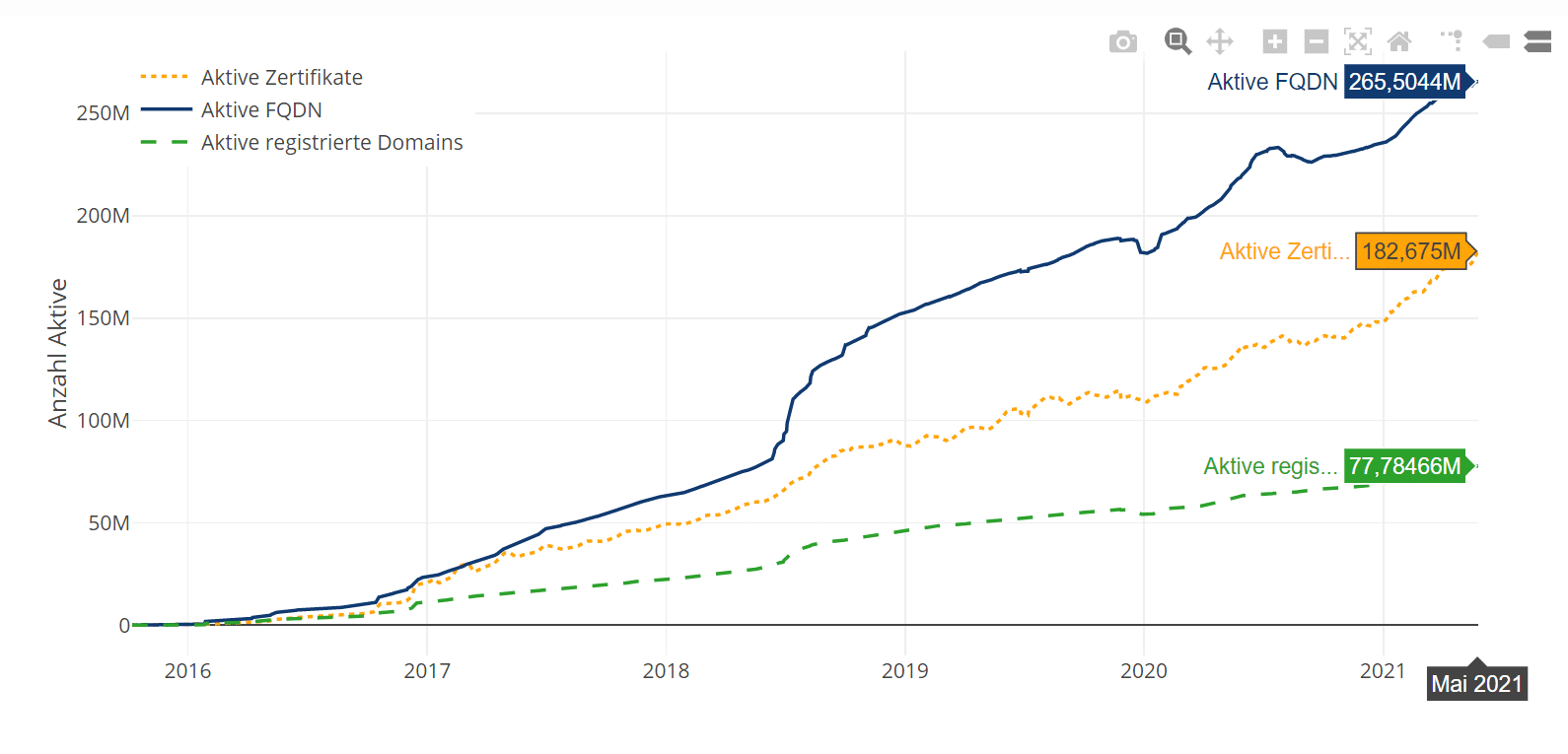

Growth of Let’s Encrypt

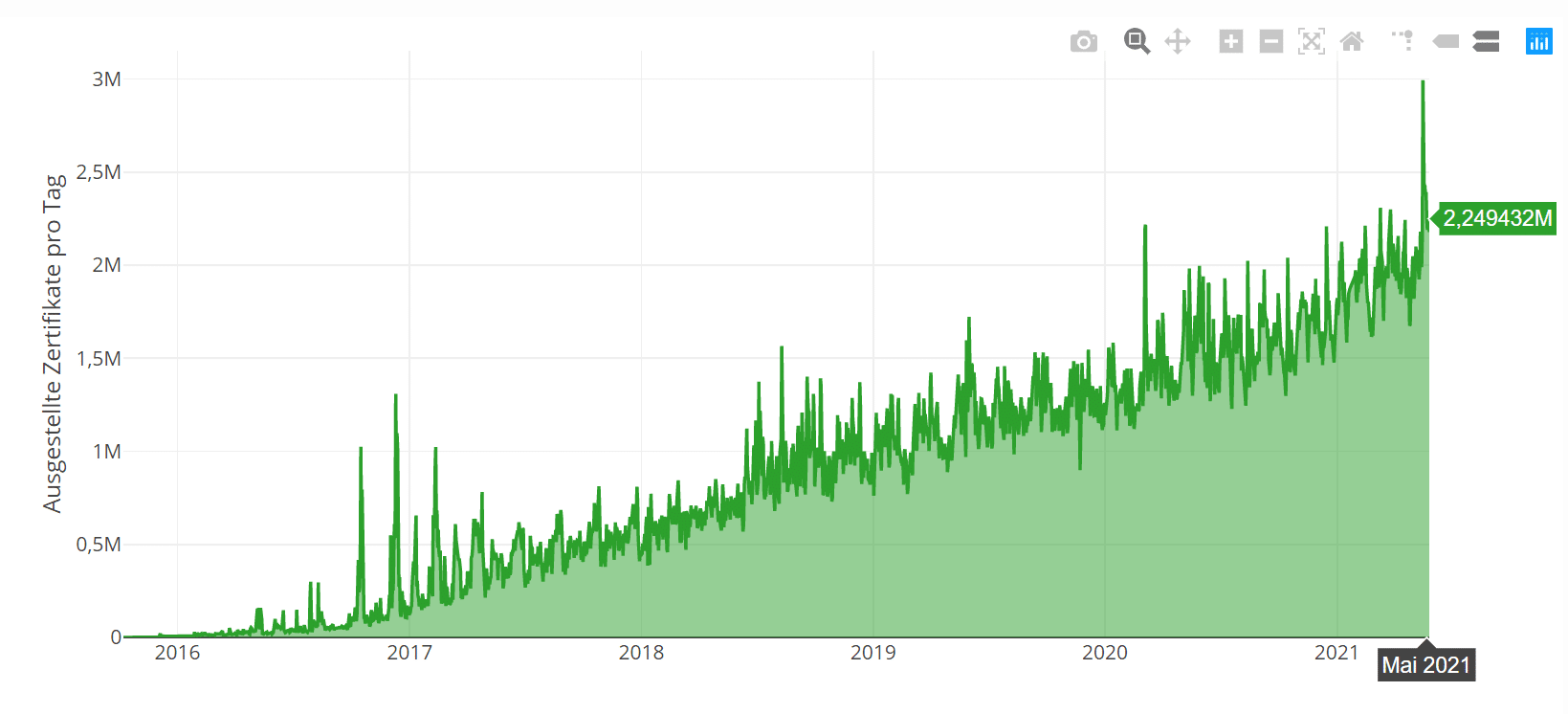

Growth of Let’s Encrypt Let’s Encrypt certificates issued per day

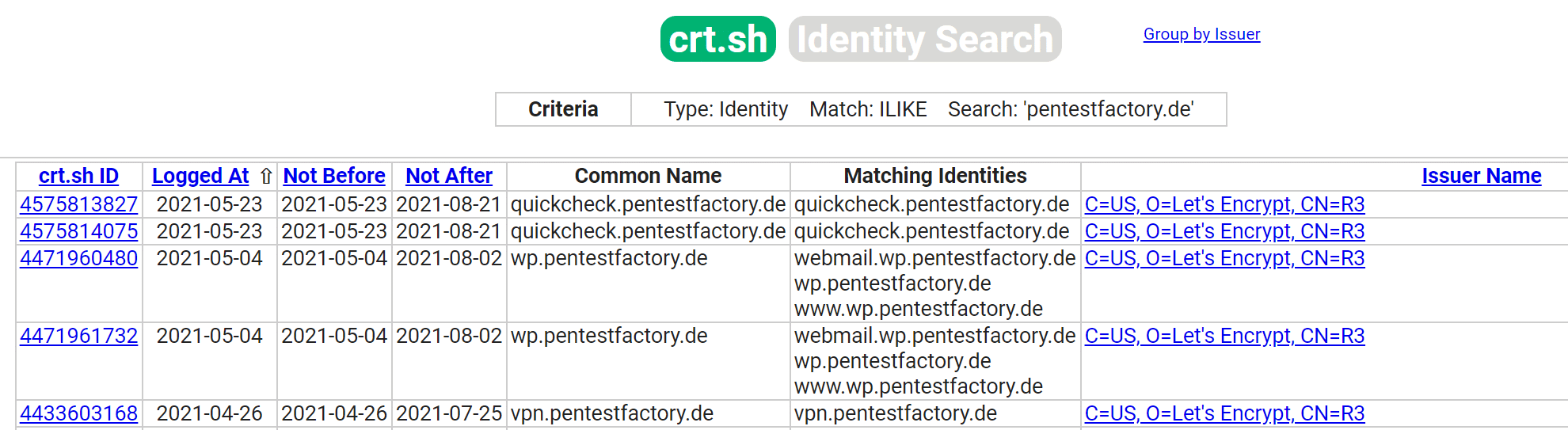

Let’s Encrypt certificates issued per day Sample excerpt from public CT logs of the domain pentestfactory.de

Sample excerpt from public CT logs of the domain pentestfactory.de